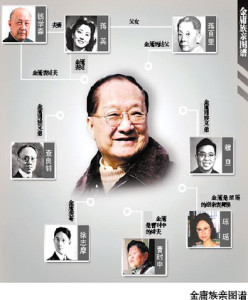

常言道︰『一圖勝千言』,此圖

或許容易『考證』,想那紀曉嵐寫『閱微草堂筆記』二十四卷,卻尋不著這段『史實』︰

【俄國使節】

我俄人,騎奇馬,張長弓 ,

單戈成戰 ;

琴瑟琵琶八大王 ,王王在上。

【紀曉嵐】

爾人你 ,襲龍衣 ,偽為人 ,

合手即拿;

魑魅魍魎四小鬼 ,鬼鬼在邊。

它載之於《幽默心理學: 思考與研究》,可能來自《 紀曉嵐傳奇》 ,雖早想『存疑』忘卻,無奈金庸說,這個『絕對』出自『黃蓉』之父『東邪黃藥師』︰

琴瑟琵琶,八大王一般頭面

魑魅魍魎,四小鬼各自肚腸

卻又似出於『司馬翎』之筆,後有『查良鏞』先生『修訂』版本,自己去『華山論劍』,以圖小說有個『合情依理』的…,只得

【橫批】

性感情忘,四季心總苦計量。

心初生,其心咸,青心賁,恐心亡。

A Void, translated from the original French La Disparition (literally, “The Disappearance”), is a 300-page French lipogrammatic novel, written in 1969 by Georges Perec, entirely without using the letter e (except for the author’s name), following Oulipo constraints.

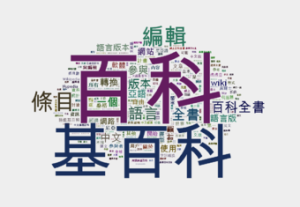

維基百科的字詞分布情況,字型越大,代表該字詞出現的機率就越大。

第九世紀阿拉伯博學家肯迪所著之《手稿上破譯加密消息》

《福爾摩斯‧歸來記》中

《跳舞的人》,解碼是『Never』

試問『人文』與『科技』的距離有多遠呢?如果有『人文科技』的『名目』,它是用著『人文』去『裝飾』『科技』,還是將以『科技』來『研究』『人文』 ??據聞有一種『質的研究』和『量的統計』恰成『對比』!!

一本沒有『字母』 e 的書,能有什麼『奇怪』的嗎?因為這不符合於一般『文本』之『字母』的『常態分佈』!還是說『另有隱情』刻意為之的呢??或是種『文字遊戲』,就像德國詩人和劇作家席勒提出『遊戲』之理論。席勒說:『只有當人充分是人的時候,他才遊戲;只有當人遊戲的時候,他才完全是人。』。或許人類在生活中勢必受到『精神』與『物質』的雙重『束縛』,因而喪失了『理想』和『自由』。於是人們假借剩下的精力打造一個『自在』的國度,這就是『遊戲』。此種『創造性』活動,源自人類的『本能』。

那麼『字詞頻率分析』與『真偽之作考據』會有關嗎?一個時代『流行』那個時代的『語詞』,一位作家有著自己『偏愛』的某些『詞彙』,這就構成了『特定』時空人物下之『字詞使用頻率』,因而可以用它來『探究』『真偽』!或許也正是『 W!o 』為難之處,想用這個『時代言語』去表述那些『未來之事』的吧!!

【齊夫定律】── 實驗顯示

Zipf’s law states that given some corpus of natural language utterances, the frequency of any word is inversely proportional to its rank in the frequency table. Thus the most frequent word will occur approximately twice as often as the second most frequent word, three times as often as the third most frequent word, etc. For example, in the Brown Corpus of American English text, the word “the” is the most frequently occurring word, and by itself accounts for nearly 7% of all word occurrences (69,971 out of slightly over 1 million).

就讓我們安裝用『派生』寫的『自然語言工具箱』 Natural Language Toolkit , 玩味一下『科技』之於『人文』!

【 Installing NLTK 】

‧Install Setuptools: http://pypi.python.org/pypi/setuptools

‧Install Pip: run sudo easy_install pip

※Install Numpy (optional): run sudo pip install -U numpy

‧Install NLTK: run sudo pip install -U nltk

‧Test installation: run python then type import nltk

思索『人機界面』的未來!!

Natural Language Toolkit

NLTK is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, and an active discussion forum.

Thanks to a hands-on guide introducing programming fundamentals alongside topics in computational linguistics, NLTK is suitable for linguists, engineers, students, educators, researchers, and industry users alike. NLTK is available for Windows, Mac OS X, and Linux. Best of all, NLTK is a free, open source, community-driven project.

NLTK has been called “a wonderful tool for teaching, and working in, computational linguistics using Python,” and “an amazing library to play with natural language.”

Natural Language Processing with Python provides a practical introduction to programming for language processing. Written by the creators of NLTK, it guides the reader through the fundamentals of writing Python programs, working with corpora, categorizing text, analyzing linguistic structure, and more. The book is being updated for Python 3 and NLTK 3. (The original Python 2 version is still available at http://nltk.org/book_1ed.)

【 Python 2.X 安裝】

# 安裝『自然語言工具庫』 nltk Natural Language Toolkit # 安裝 python-setuptools pi@raspberrypi ~sudo apt-get install python-setuptools 正在讀取套件清單... 完成 正在重建相依關係 正在讀取狀態資料... 完成 下列【新】套件將會被安裝: python-setuptools 升級 0 個,新安裝 1 個,移除 0 個,有 0 個未被升級。 需要下載 449 kB 的套件檔。 此操作完成之後,會多佔用 1,120 kB 的磁碟空間。 下載:1 http://mirrordirector.raspbian.org/raspbian/ wheezy/main python-setuptools all 0.6.24-1 [449 kB] 取得 449 kB 用了 1s (335 kB/s) 選取了原先未選的套件 python-setuptools。 (讀取資料庫 ... 目前共安裝了 181285 個檔案和目錄。) 解開 python-setuptools(從 .../python-setuptools_0.6.24-1_all.deb)... 設定 python-setuptools (0.6.24-1) ... # 安裝 pip pi@raspberrypi ~

sudo pip install -U nltk /usr/local/lib/python2.7/dist-packages/pip-6.1.1-py2.7.egg/pip/_vendor/requests/packages/urllib3/util/ssl_.py:79: InsecurePlatformWarning: A true SSLContext object is not available. This prevents urllib3 from configuring SSL appropriately and may cause certain SSL connections to fail. For more information, see https://urllib3.readthedocs.org/en/latest/security.html#insecureplatformwarning. InsecurePlatformWarning Collecting nltk /usr/local/lib/python2.7/dist-packages/pip-6.1.1-py2.7.egg/pip/_vendor/requests/packages/urllib3/util/ssl_.py:79: InsecurePlatformWarning: A true SSLContext object is not available. This prevents urllib3 from configuring SSL appropriately and may cause certain SSL connections to fail. For more information, see https://urllib3.readthedocs.org/en/latest/security.html#insecureplatformwarning. InsecurePlatformWarning Downloading nltk-3.0.2.tar.gz (991kB) 100% |████████████████████████████████| 991kB 58kB/s Installing collected packages: nltk Running setup.py install for nltk Successfully installed nltk-3.0.2 pi@raspberrypi ~

sudo apt-get install python3-pip pi@raspberrypi ~

wget https://pypi.python.org/packages/source/n/nltk/nltk-3.0.2.tar.gz#md5=022e3e19b17ddabc0026bf0c8ce62dcf pi@raspberrypi ~

cd nltk-3.0.2/ sudo python3 setup.py install

【安裝測試】

pi@raspberrypi ~ $ python3

Python 3.2.3 (default, Mar 1 2013, 11:53:50)

[GCC 4.6.3] on linux2

Type "help", "copyright", "credits" or "license" for more information.

# 導入 nltk

>>> import nltk

>>> sentence = """At eight o'clock on Thursday morning

... Arthur didn't feel very good."""

# 沒有下載 punkt

>>> tokens = nltk.word_tokenize(sentence)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/local/lib/python3.2/dist-packages/nltk-3.0.2-py3.2.egg/nltk/tokenize/__init__.py", line 101, in word_tokenize

return [token for sent in sent_tokenize(text, language)

File "/usr/local/lib/python3.2/dist-packages/nltk-3.0.2-py3.2.egg/nltk/tokenize/__init__.py", line 85, in sent_tokenize

tokenizer = load('tokenizers/punkt/{0}.pickle'.format(language))

File "/usr/local/lib/python3.2/dist-packages/nltk-3.0.2-py3.2.egg/nltk/data.py", line 781, in load

opened_resource = _open(resource_url)

File "/usr/local/lib/python3.2/dist-packages/nltk-3.0.2-py3.2.egg/nltk/data.py", line 895, in _open

return find(path_, path + ['']).open()

File "/usr/local/lib/python3.2/dist-packages/nltk-3.0.2-py3.2.egg/nltk/data.py", line 624, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource 'tokenizers/punkt/PY3/english.pickle' not found.

Please use the NLTK Downloader to obtain the resource: >>>

nltk.download()

Searched in:

- '/home/pi/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- ''

**********************************************************************

# 下載 punkt

>>> nltk.download()

NLTK Downloader

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Downloader> d

Download which package (l=list; x=cancel)?

Identifier> punkt

Downloading package punkt to /home/pi/nltk_data...

Unzipping tokenizers/punkt.zip.

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Downloader> q

True

# 再次執行

>>> tokens = nltk.word_tokenize(sentence)

>>> tokens

['At', 'eight', "o'clock", 'on', 'Thursday', 'morning', 'Arthur', 'did', "n't", 'feel', 'very', 'good', '.']

# 缺少 maxent_treebank_pos_tagger

>>> tagged = nltk.pos_tag(tokens)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/local/lib/python3.2/dist-packages/nltk-3.0.2-py3.2.egg/nltk/tag/__init__.py", line 103, in pos_tag

tagger = load(_POS_TAGGER)

File "/usr/local/lib/python3.2/dist-packages/nltk-3.0.2-py3.2.egg/nltk/data.py", line 781, in load

opened_resource = _open(resource_url)

File "/usr/local/lib/python3.2/dist-packages/nltk-3.0.2-py3.2.egg/nltk/data.py", line 895, in _open

return find(path_, path + ['']).open()

File "/usr/local/lib/python3.2/dist-packages/nltk-3.0.2-py3.2.egg/nltk/data.py", line 624, in find

raise LookupError(resource_not_found)

LookupError:

**********************************************************************

Resource 'taggers/maxent_treebank_pos_tagger/PY3/english.pickle'

not found. Please use the NLTK Downloader to obtain the

resource: >>> nltk.download()

Searched in:

- '/home/pi/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

- ''

**********************************************************************

# 表列套件及下載

>>> nltk.download()

NLTK Downloader

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Downloader> l

Packages:

[ ] abc................. Australian Broadcasting Commission 2006

[ ] alpino.............. Alpino Dutch Treebank

[ ] basque_grammars..... Grammars for Basque

[ ] biocreative_ppi..... BioCreAtIvE (Critical Assessment of Information

Extraction Systems in Biology)

[ ] bllip_wsj_no_aux.... BLLIP Parser: WSJ Model

[ ] book_grammars....... Grammars from NLTK Book

[ ] brown............... Brown Corpus

[ ] brown_tei........... Brown Corpus (TEI XML Version)

[ ] cess_cat............ CESS-CAT Treebank

[ ] cess_esp............ CESS-ESP Treebank

[ ] chat80.............. Chat-80 Data Files

[ ] city_database....... City Database

[ ] cmudict............. The Carnegie Mellon Pronouncing Dictionary (0.6)

[ ] comtrans............ ComTrans Corpus Sample

[ ] conll2000........... CONLL 2000 Chunking Corpus

[ ] conll2002........... CONLL 2002 Named Entity Recognition Corpus

[ ] conll2007........... Dependency Treebanks from CoNLL 2007 (Catalan

and Basque Subset)

[ ] crubadan............ Crubadan Corpus

Hit Enter to continue: l

[ ] dependency_treebank. Dependency Parsed Treebank

[ ] europarl_raw........ Sample European Parliament Proceedings Parallel

Corpus

[ ] floresta............ Portuguese Treebank

[ ] framenet_v15........ FrameNet 1.5

[ ] gazetteers.......... Gazeteer Lists

[ ] genesis............. Genesis Corpus

[ ] gutenberg........... Project Gutenberg Selections

[ ] hmm_treebank_pos_tagger Treebank Part of Speech Tagger (HMM)

[ ] ieer................ NIST IE-ER DATA SAMPLE

[ ] inaugural........... C-Span Inaugural Address Corpus

[ ] indian.............. Indian Language POS-Tagged Corpus

[ ] jeita............... JEITA Public Morphologically Tagged Corpus (in

ChaSen format)

[ ] kimmo............... PC-KIMMO Data Files

[ ] knbc................ KNB Corpus (Annotated blog corpus)

[ ] large_grammars...... Large context-free and feature-based grammars

for parser comparison

[ ] lin_thesaurus....... Lin's Dependency Thesaurus

[ ] mac_morpho.......... MAC-MORPHO: Brazilian Portuguese news text with

part-of-speech tags

Hit Enter to continue: l

[ ] machado............. Machado de Assis -- Obra Completa

[ ] masc_tagged......... MASC Tagged Corpus

[ ] maxent_ne_chunker... ACE Named Entity Chunker (Maximum entropy)

[ ] maxent_treebank_pos_tagger Treebank Part of Speech Tagger (Maximum entropy)

[ ] moses_sample........ Moses Sample Models

[ ] movie_reviews....... Sentiment Polarity Dataset Version 2.0

[ ] names............... Names Corpus, Version 1.3 (1994-03-29)

[ ] nombank.1.0......... NomBank Corpus 1.0

[ ] nps_chat............ NPS Chat

[ ] oanc_masc........... Open American National Corpus: Manually

Annotated Sub-Corpus

[ ] omw................. Open Multilingual Wordnet

[ ] paradigms........... Paradigm Corpus

[ ] pe08................ Cross-Framework and Cross-Domain Parser

Evaluation Shared Task

[ ] pil................. The Patient Information Leaflet (PIL) Corpus

[ ] pl196x.............. Polish language of the XX century sixties

[ ] ppattach............ Prepositional Phrase Attachment Corpus

[ ] problem_reports..... Problem Report Corpus

[ ] propbank............ Proposition Bank Corpus 1.0

[ ] ptb................. Penn Treebank

Hit Enter to continue: l

[*] punkt............... Punkt Tokenizer Models

[ ] qc.................. Experimental Data for Question Classification

[ ] reuters............. The Reuters-21578 benchmark corpus, ApteMod

version

[ ] rslp................ RSLP Stemmer (Removedor de Sufixos da Lingua

Portuguesa)

[ ] rte................. PASCAL RTE Challenges 1, 2, and 3

[ ] sample_grammars..... Sample Grammars

[ ] semcor.............. SemCor 3.0

[ ] senseval............ SENSEVAL 2 Corpus: Sense Tagged Text

[ ] sentiwordnet........ SentiWordNet

[ ] shakespeare......... Shakespeare XML Corpus Sample

[ ] sinica_treebank..... Sinica Treebank Corpus Sample

[ ] smultron............ SMULTRON Corpus Sample

[ ] snowball_data....... Snowball Data

[ ] spanish_grammars.... Grammars for Spanish

[ ] state_union......... C-Span State of the Union Address Corpus

[ ] stopwords........... Stopwords Corpus

[ ] swadesh............. Swadesh Wordlists

[ ] switchboard......... Switchboard Corpus Sample

[ ] tagsets............. Help on Tagsets

Hit Enter to continue: l

[ ] timit............... TIMIT Corpus Sample

[ ] toolbox............. Toolbox Sample Files

[ ] treebank............ Penn Treebank Sample

[ ] udhr2............... Universal Declaration of Human Rights Corpus

(Unicode Version)

[ ] udhr................ Universal Declaration of Human Rights Corpus

[ ] unicode_samples..... Unicode Samples

[ ] universal_tagset.... Mappings to the Universal Part-of-Speech Tagset

[ ] universal_treebanks_v20 Universal Treebanks Version 2.0

[ ] verbnet............. VerbNet Lexicon, Version 2.1

[ ] webtext............. Web Text Corpus

[ ] wordnet............. WordNet

[ ] wordnet_ic.......... WordNet-InfoContent

[ ] words............... Word Lists

[ ] ycoe................ York-Toronto-Helsinki Parsed Corpus of Old

English Prose

Collections:

[ ] all-corpora......... All the corpora

[P] all................. All packages

[P] book................ Everything used in the NLTK Book

([*] marks installed packages; [P] marks partially installed collections)

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Downloader> d

Download which package (l=list; x=cancel)?

Identifier> maxent_treebank_pos_tagger

Downloading package maxent_treebank_pos_tagger to

/home/pi/nltk_data...

Unzipping taggers/maxent_treebank_pos_tagger.zip.

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Downloader> q

True

# 重新執行

>>> tagged = nltk.pos_tag(tokens)

>>> tagged[0:6]

[('At', 'IN'), ('eight', 'CD'), ("o'clock", 'JJ'), ('on', 'IN'), ('Thursday', 'NNP'), ('morning', 'NN')]

>>>