In Shakespeare's play Hamlet there is a famous line:

To be or not to be, that is the question

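Granted, 'to be' is not necessarily free of 'misery', and 'not to be' is not necessarily 'ruin'; but why are only sorrowful choices left? Looking back on the history of 'democratic states' and 'elite politics', is there nothing to do but sigh?? If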

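in the past the British philosopher and mathematician Russell deeply distrusted 'democratic voting' under 'majority rule', and mockingly called it a matter of 'fools electing donkeys'. Perhaps Russell put more faith in Plato's 'Republic', where a 'wise philosopher' king would naturally 'select the worthy and employ the able' and so bring about 'great order under heaven'. He asked whether, seen from the standpoint of 'probability', a nationwide vote of 'one person, one vote' could really produce such a 'result' of selecting the worthy and the able. Put another way, can the 'quantity' of people really be sure to produce 'quality' of people? Judged against 'human history', this is probably 'not very likely'??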

then

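not long afterward the American philosopher and educator John Dewey responded that 'the cornerstone of democracy is education', and in his famous work 《Democracy and Education》 he stated plainly that 'the aim of education is to enable each person to continue their education ... please do not look for any other aim beyond this aim of education, and still less treat education as an appendage of some particular aim.' Yet has this world actually set out on the 'proper track of education' that Dewey described??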

─── Excerpted from 《公平與正義!!》 (Fairness and Justice!!)

Therefore

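Convolutional neural network

A convolutional neural network (CNN) is a feedforward neural network whose artificial neurons respond to surrounding units within a limited coverage area [1]. It performs very well on large-scale image processing.

A convolutional neural network consists of one or more convolutional layers topped by fully connected layers (corresponding to a classical neural network), together with associated weights and pooling layers. This structure lets a convolutional neural network exploit the two-dimensional structure of its input data. Compared with other deep learning architectures, convolutional neural networks give better results in image and speech recognition. The model can also be trained with the backpropagation algorithm. Compared with other deep, feedforward neural networks, a convolutional neural network has fewer parameters to estimate, which makes it an attractive deep learning architecture [2].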

───

It can 'train' and 'recognize' this well! But why?? Could it be that 'quantity' really does determine 'quality'!!

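But consider: what is meant by a 'feature'? There is number within the image. And what is meant by 'computation'?? There is image within number. Is this not a case of 'things gather by kind, numbers divide by group'!!??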

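Hence, arguing from the character 『能』 ('ability'): the convolutional neural network models itself on the 'eye of living creatures'; having seen something, will it then go on to 『為』 ('act')??!!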

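Those who created the characters say: 『能』 belongs to the bear kind, and 『為』 to the elephant kind. Both are large and powerful creatures. Only with both 『為』 ('action') and 『能』 ('ability') can one 'benefit living beings'. Suppose

those who think drift off into fantasy, while those who do not think accomplish nothing.

If the able are lacking as well, what then is to be done???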

If someone were to master the 'backpropagation algorithm'

Neural Networks – A Systematic Introduction

a book by Raul Rojas

Foreword by Jerome Feldman

Springer-Verlag, Berlin, New-York, 1996 (502 p.,350 illustrations).

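and apply it to the network of 'society at large', they might infer that the backward propagation of 'popular anger' will eventually 'reshape' that network, stirring up one towering wave after another!!!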

Rather than be anxious and bewildered, better to let things take their course and study the method with a quiet mind

CS231n: Convolutional Neural Networks for Visual Recognition.

Table of Contents:

- Architecture Overview

- ConvNet Layers

- ConvNet Architectures

- Layer Patterns

- Layer Sizing Patterns

- Case Studies (LeNet / AlexNet / ZFNet / GoogLeNet / VGGNet)

- Computational Considerations

- Additional References

Convolutional Neural Networks (CNNs / ConvNets)

Convolutional Neural Networks are very similar to ordinary Neural Networks from the previous chapter: they are made up of neurons that have learnable weights and biases. Each neuron receives some inputs, performs a dot product and optionally follows it with a non-linearity. The whole network still expresses a single differentiable score function: from the raw image pixels on one end to class scores at the other. And they still have a loss function (e.g. SVM/Softmax) on the last (fully-connected) layer and all the tips/tricks we developed for learning regular Neural Networks still apply.
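The per-neuron computation described above (a dot product, optionally followed by a non-linearity) can be sketched in a few lines of plain Python. This is only an illustration: the function name `neuron_forward` is hypothetical, and the choice of ReLU as the non-linearity is an assumption, since the text does not name one.

```python
def neuron_forward(inputs, weights, bias):
    """Dot product of inputs and weights, plus a bias, followed by ReLU.

    ReLU is an assumed choice of non-linearity; the text only says
    that a non-linearity may optionally follow the dot product.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Weighted sum of the inputs (the dot product) plus the bias term.
    pre_activation = sum(x * w for x, w in zip(inputs, weights)) + bias
    # ReLU: pass positive values through, clamp negative values to zero.
    return max(0.0, pre_activation)


if __name__ == "__main__":
    print(neuron_forward([1.0, 2.0, 3.0], [0.5, -1.0, 0.25], bias=1.0))
```

Both the weights and the bias are the learnable quantities the text refers to; training would adjust them, which this sketch does not attempt.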

So what does change? ConvNet architectures make the explicit assumption that the inputs are images, which allows us to encode certain properties into the architecture. These then make the forward function more efficient to implement and vastly reduce the amount of parameters in the network.

Architecture Overview

Recall: Regular Neural Nets. As we saw in the previous chapter, Neural Networks receive an input (a single vector), and transform it through a series of hidden layers. Each hidden layer is made up of a set of neurons, where each neuron is fully connected to all neurons in the previous layer, and where neurons in a single layer function completely independently and do not share any connections. The last fully-connected layer is called the “output layer” and in classification settings it represents the class scores.

Regular Neural Nets don’t scale well to full images. In CIFAR-10, images are only of size 32x32x3 (32 wide, 32 high, 3 color channels), so a single fully-connected neuron in a first hidden layer of a regular Neural Network would have 32*32*3 = 3072 weights. This amount still seems manageable, but clearly this fully-connected structure does not scale to larger images. For example, an image of more respectable size, e.g. 200x200x3, would lead to neurons that have 200*200*3 = 120,000 weights. Moreover, we would almost certainly want to have several such neurons, so the parameters would add up quickly! Clearly, this full connectivity is wasteful and the huge number of parameters would quickly lead to overfitting.
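The arithmetic in this paragraph is easy to check with a small sketch. The helper names below are hypothetical, and the layer width of 100 neurons in the usage line is an arbitrary illustrative value, not a figure from the text.

```python
def fc_weights_per_neuron(width, height, channels):
    """Weights of one fully-connected neuron that sees the whole image."""
    return width * height * channels


def fc_layer_parameters(width, height, channels, num_neurons):
    """Total parameters of a fully-connected first hidden layer.

    Each neuron has one weight per input value plus one bias.
    """
    weights = fc_weights_per_neuron(width, height, channels) * num_neurons
    biases = num_neurons
    return weights + biases


if __name__ == "__main__":
    print(fc_weights_per_neuron(32, 32, 3))     # CIFAR-10 sized image
    print(fc_weights_per_neuron(200, 200, 3))   # larger image
    # Hypothetical layer of 100 neurons on the larger image.
    print(fc_layer_parameters(200, 200, 3, num_neurons=100))
```

The per-neuron counts reproduce the 3072 and 120,000 figures quoted above; multiplying by even a modest number of neurons shows how quickly the total grows.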

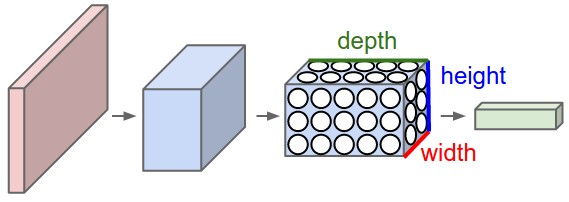

3D volumes of neurons. Convolutional Neural Networks take advantage of the fact that the input consists of images and they constrain the architecture in a more sensible way. In particular, unlike a regular Neural Network, the layers of a ConvNet have neurons arranged in 3 dimensions: width, height, depth. (Note that the word depth here refers to the third dimension of an activation volume, not to the depth of a full Neural Network, which can refer to the total number of layers in a network.) For example, the input images in CIFAR-10 are an input volume of activations, and the volume has dimensions 32x32x3 (width, height, depth respectively). As we will soon see, the neurons in a layer will only be connected to a small region of the layer before it, instead of all of the neurons in a fully-connected manner. Moreover, the final output layer would for CIFAR-10 have dimensions 1x1x10, because by the end of the ConvNet architecture we will reduce the full image into a single vector of class scores, arranged along the depth dimension. Here is a visualization:

───

Incline the ear and listen closely (諦聽)

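Diting (諦聽), also called 'Shanting' (善聽, 'good listener'), is a divine beast in Buddhism and Chinese mythology, said to be the mount of Ksitigarbha Bodhisattva (地藏王菩薩). It is often enshrined beside the altar of Ksitigarbha in Buddhist temples.

Abilities

It is said to be able to distinguish all good and evil, wise and foolish, in the world. Wu Cheng'en, 《Journey to the West》: if Diting crouches on the ground, then in an instant, across the mountains, rivers, and altars of state and the grotto-heavens and blessed lands of the Four Great Continents, among the naked, scaly, furry, feathered, and insect creatures, and among the celestial, earthly, divine, human, and ghostly immortals, it can discern good from evil and tell the wise from the foolish.

Legend

Prince Kim Gyo-gak (金喬覺) of the kingdom of Silla became a monk, taking a white dog with him, and lived in seclusion in the mountains. Kim came to be regarded as an incarnation of Ksitigarbha, and the white dog as the original form of the divine beast Diting.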

───

That is all.