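Perhaps it is by some karmic affinity (因緣) that the little car

thinks of the little divine turtle of the Luo River, roaming free and at ease.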

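Master Zixu (子虛先生) said: In ancient times there was a divine turtle in the Luo River. Always at dawn and dusk, when colorful clouds filled the sky, it swam among the shimmering light and glowing reflections on the Luo's waves. Whenever the mood took it, it would stride straight ahead on its divine feet, wheeling left and turning right in a soaring dance. At such times no waves rose and not a ripple appeared; the river surface simply followed the rise and fall of the turtle's tail, which, now thick, now thin, long or short, straight or curved, carved it into pictures and script. One day Yu the Great, while taming the floods, happened to pass the Luo River and came upon the divine turtle. Grateful for the favor Yu had once done by draining the floodwaters and dredging the rivers, the turtle performed its proudest water drawing for him, and that is why history tells that "Yu the Great obtained the Luo Shu (洛書)."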

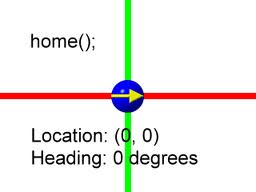

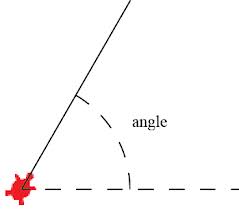

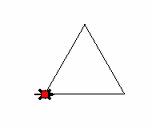

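Although the author regards Master Zixu's account as pure fiction (烏有之言; 子虛 and 烏有 together form the idiom for something that never existed), Master Zixu nonetheless captures the spirit of "little turtle" drawing vividly. The direction the turtle's head points determines the direction in which it moves "forward". The instruction "[forward] [value]" moves it forward by that many units; for example, forward 10 means move forward ten units. Besides moving straight, the turtle can also "turn". A turn is a "left turn" or "right turn" relative to its current heading and is written "[rotate] [angle]"; for example, rotate 90 means turn right ninety degrees, and rotate -90 means turn left ninety degrees. The turtle's "position" on the web page is given by (X, Y) coordinates, with (0, 0) at the top-left and (600, 600) at the bottom-right. The turtle's current "state" is determined jointly by its current "position" and "heading angle"; this is the state that the push (save onto the stack) and pop (restore from the stack) instructions refer to. The turtle can be given a starting "position" and "heading"; when the page first loads, it sits at (50, 300) with its head pointing right, at angle 0.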
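To make the command set above concrete, here is a minimal Python sketch of such a turtle. It is not the page's own implementation: the class name `Turtle`, the `segments` list, and recording lines as coordinate pairs are assumptions made for illustration. It follows the conventions described: screen coordinates with y growing downward, a default start at (50, 300) facing right at angle 0, positive `rotate` angles turning right, and `push`/`pop` saving and restoring position plus heading.

```python
import math


class Turtle:
    """Hypothetical minimal turtle: (0, 0) is the top-left corner and
    (600, 600) the bottom-right, so y grows downward on the screen."""

    def __init__(self, x=50.0, y=300.0, angle=0.0):
        # Default start: (50, 300), head pointing right (angle 0).
        self.x, self.y, self.angle = x, y, angle
        self.stack = []      # saved (x, y, angle) states for push/pop
        self.segments = []   # line segments drawn so far

    def forward(self, dist):
        # Move along the current heading and record the line drawn.
        rad = math.radians(self.angle)
        nx = self.x + dist * math.cos(rad)
        ny = self.y + dist * math.sin(rad)
        self.segments.append(((self.x, self.y), (nx, ny)))
        self.x, self.y = nx, ny

    def rotate(self, deg):
        # Positive degrees turn right (clockwise on screen), negative turn left.
        self.angle = (self.angle + deg) % 360

    def push(self):
        # Save the current state: position plus heading.
        self.stack.append((self.x, self.y, self.angle))

    def pop(self):
        # Restore the most recently saved state without drawing anything.
        self.x, self.y, self.angle = self.stack.pop()


if __name__ == "__main__":
    t = Turtle()
    t.push()
    t.forward(10)   # to (60, 300)
    t.rotate(90)    # right turn: now heading down the screen
    t.forward(10)   # to about (60, 310)
    t.pop()         # back to (50, 300), facing right
    print(len(t.segments), (t.x, t.y, t.angle))
```

Because y points down the screen, the ordinary cos/sin formulas already make a positive angle turn the heading clockwise, which is why `rotate(90)` comes out as a right turn with no sign flip.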

─── Excerpted from 《科赫傳說!!》 (The Koch Legend!!)

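Autonomous and self-moving, meridians flowing freely so that action follows the instant the mind intends it, living things have long since forgotten what "control" even means. This "GoPiGo", however, is after all a man-made thing that does as it is told; to move forward, back up, turn left, and turn right, it cannot do without some "motion mechanism"…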

─── Excerpted from 《樹莓派 0W 狂想曲︰ 木牛流馬《控制》》 (Raspberry Pi 0W Rhapsody: Wooden Ox and Gliding Horse 《Control》)

Only now beginning to notice

the twilight phenomenon (薄暮現象)

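The twilight phenomenon, also called the Purkinje effect, is one of the topics of color science.

It is explained as follows: under a white light source or at high luminance, red appears 10 times brighter than blue, while at low luminance blue appears 16 times brighter than red. The term refers to the way human vision, at dusk, shifts from giving priority to chroma to perceiving light and dark.

The retina contains two kinds of cells that receive light from the outside world: color-sensing cones, and rods, which sense light but not color. In dim light, humans cannot clearly distinguish colors, because only the rods still respond to the faint light and the cones stop sensing color.

The twilight phenomenon is a state within the transition from a stage dominated by the color-sensing cells to one dominated by the light-sensing cells: as external illumination gradually falls, cone activity declines and the rods take over as the retina's main light receptors. The phenomenon occupies this stage, before the ability to distinguish colors disappears completely.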

Simulated appearance of a red geranium and foliage in normal bright-light (photopic) vision, dusk (mesopic) vision, and night (scotopic) vision

Purkinje effect

The Purkinje effect (sometimes called the Purkinje shift or dark adaptation) is the tendency for the peak luminance sensitivity of the human eye to shift toward the blue end of the color spectrum at low illumination levels.[1][2] The effect is named after the Czech anatomist Jan Evangelista Purkyně.

This effect introduces a difference in color contrast under different levels of illumination. For instance, in bright sunlight, geranium flowers appear bright red against the dull green of their leaves, or adjacent blue flowers, but in the same scene viewed at dusk, the contrast is reversed, with the red petals appearing a dark red or black, and the leaves and blue petals appearing relatively bright.

The sensitivity to light in scotopic vision varies with wavelength, though the perception is essentially black-and-white. The Purkinje shift is the relation between the absorption maximum of rhodopsin, at about 500 nm, and that of the opsins in the long-wavelength and medium-wavelength cones that dominate in photopic vision, at about 555 nm.[3]

In visual astronomy, the Purkinje shift can affect visual estimates of variable stars when using comparison stars of different colors, especially if one of the stars is red.

Physiology

The effect occurs because the color-sensitive cones in the retina are most sensitive to green light, whereas the rods, which are more light-sensitive (and thus more important in low light) but which do not distinguish colors, respond best to green-blue light.[4] This is why humans become virtually color-blind under low levels of illumination, for instance moonlight.

The Purkinje effect occurs at the transition between primary use of the photopic (cone-based) and scotopic (rod-based) systems, that is, in the mesopic state: as intensity dims, the rods take over, and before color disappears completely, it shifts towards the rods’ top sensitivity.[5]
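One way to picture the drift toward the rods' peak is to blend a photopic and a scotopic sensitivity curve with a weight m, where m = 1 is full daylight vision and m = 0 is pure night vision; CIE mesopic photometry uses a weighted sum of this form. The sketch below is only a toy: both curves are hypothetical Gaussians (width `sigma = 42` nm, an assumed value) centered on the roughly 555 nm and 500 nm peaks quoted above, not the tabulated CIE functions.

```python
import math


def gaussian(wl, peak, sigma=42.0):
    # Hypothetical bell-shaped stand-in for a sensitivity curve (not CIE data).
    return math.exp(-0.5 * ((wl - peak) / sigma) ** 2)


def mesopic_peak(m, photopic_peak=555.0, scotopic_peak=500.0):
    """Wavelength (nm) of maximum sensitivity for the blend
    m * photopic + (1 - m) * scotopic, with m in [0, 1]."""
    best_wl, best_val = None, -1.0
    for wl in range(380, 781):  # visible range in 1 nm steps
        v = m * gaussian(wl, photopic_peak) + (1 - m) * gaussian(wl, scotopic_peak)
        if v > best_val:
            best_wl, best_val = wl, v
    return best_wl


if __name__ == "__main__":
    # As the light dims (m falls), the peak slides from ~555 nm toward ~500 nm.
    for m in (1.0, 0.75, 0.5, 0.25, 0.0):
        print(m, mesopic_peak(m))
```

Because the two toy peaks are closer together than twice `sigma`, the blend keeps a single peak at every weight, so the maximum moves smoothly toward the blue end as m decreases rather than jumping between two humps.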

Rod sensitivity improves considerably after 5-10 minutes in the dark,[6] but rods take about 30 minutes of darkness to regenerate photoreceptors and reach full sensitivity.[7]

Devoting myself to study

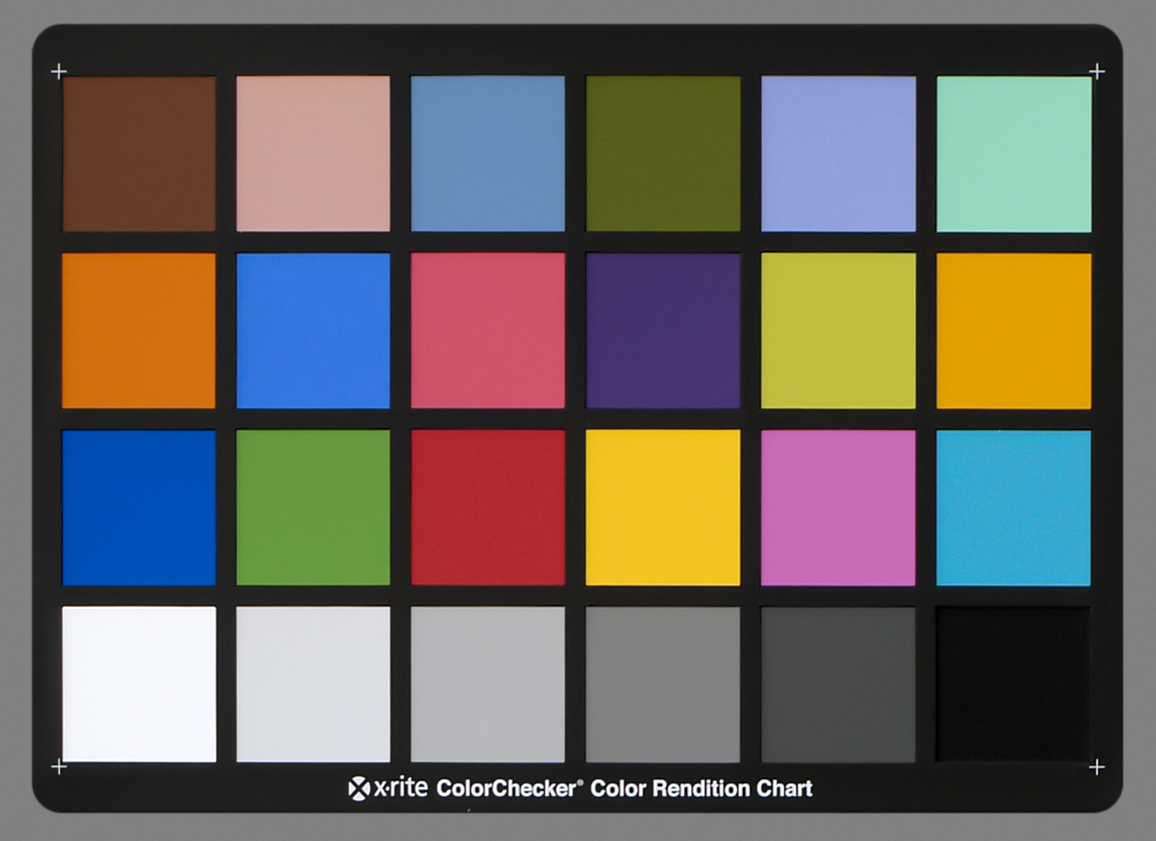

Color balance

In photography and image processing, color balance is the global adjustment of the intensities of the colors (typically red, green, and blue primary colors). An important goal of this adjustment is to render specific colors – particularly neutral colors – correctly. Hence, the general method is sometimes called gray balance, neutral balance, or white balance. Color balance changes the overall mixture of colors in an image and is used for color correction. Generalized versions of color balance are used to correct colors other than neutrals or to deliberately change them for effect.

Image data acquired by sensors – either film or electronic image sensors – must be transformed from the acquired values to new values that are appropriate for color reproduction or display. Several aspects of the acquisition and display process make such color correction essential – including the fact that the acquisition sensors do not match the sensors in the human eye, that the properties of the display medium must be accounted for, and that the ambient viewing conditions of the acquisition differ from the display viewing conditions.

The color balance operations in popular image editing applications usually operate directly on the red, green, and blue channel pixel values,[1][2] without respect to any color sensing or reproduction model. In film photography, color balance is typically achieved by using color correction filters over the lights or on the camera lens.[3]

The left half shows the photo as it came from the digital camera. The right half shows the photo adjusted to make a gray surface neutral in the same light.

Generalized color balance

Sometimes the adjustment to keep neutrals neutral is called white balance, and the phrase color balance refers to the adjustment that in addition makes other colors in a displayed image appear to have the same general appearance as the colors in an original scene.[4] It is particularly important that neutral colors (grays and white) in a scene appear neutral in the reproduction.[5]

Example of color balancing

Illuminant estimation and adaptation

Most digital cameras have means to select color correction based on the type of scene lighting, using either manual lighting selection, automatic white balance, or custom white balance. The algorithms for these processes perform generalized chromatic adaptation.

Many methods exist for color balancing. Setting a button on a camera is a way for the user to indicate to the processor the nature of the scene lighting. Another option on some cameras is a button which one may press when the camera is pointed at a gray card or other neutral colored object. This captures an image of the ambient light, which enables a digital camera to set the correct color balance for that light.
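A minimal sketch of the gray-card idea, assuming 8-bit RGB pixels stored as tuples: average the pixels sampled from the card, derive one gain per channel that makes that average neutral, then apply the gains to every pixel. The function names are hypothetical, and normalizing to the green channel is an assumed convention rather than something the text specifies.

```python
def gray_card_gains(patch):
    """Per-channel gains that make the average of a gray-card patch neutral.
    patch: list of (r, g, b) pixels sampled from the gray card.
    Assumes no channel averages to zero."""
    n = len(patch)
    avg = [sum(p[c] for p in patch) / n for c in range(3)]
    # Normalize to the green channel (an assumed convention).
    return tuple(avg[1] / a for a in avg)


def apply_gains(pixels, gains, max_value=255):
    # Scale each channel by its gain, rounding and clipping to the 8-bit range.
    return [tuple(min(max_value, round(v * k)) for v, k in zip(px, gains))
            for px in pixels]


if __name__ == "__main__":
    card = [(150, 120, 90), (152, 121, 89)]   # gray card under warm light
    gains = gray_card_gains(card)
    print(gains, apply_gains([(150, 120, 90), (200, 100, 50)], gains))
```

For example, a card reading (150, 120, 90) gives gains of (0.8, 1.0, about 1.33), which map the card back to (120, 120, 120).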

There is a large literature on how one might estimate the ambient lighting from the camera data and then use this information to transform the image data. A variety of algorithms have been proposed, and the quality of these has been debated. A few examples and examination of the references therein will lead the reader to many others. Examples are Retinex, an artificial neural network[6] or a Bayesian method.[7]

A seascape photograph at Clifton Beach, South Arm, Tasmania, Australia. The white balance has been adjusted towards the warm side for creative effect.

Chromatic colors

Color balancing an image affects not only the neutrals, but other colors as well. An image that is not color balanced is said to have a color cast, as everything in the image appears to have been shifted towards one color.[8] Color balancing may be thought of in terms of removing this color cast.

Color balance is also related to color constancy. Algorithms and techniques used to attain color constancy are frequently used for color balancing, as well. Color constancy is, in turn, related to chromatic adaptation. Conceptually, color balancing consists of two steps: first, determining the illuminant under which an image was captured; and second, scaling the components (e.g., R, G, and B) of the image or otherwise transforming the components so they conform to the viewing illuminant.
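The two steps can be sketched as follows. The text names no particular estimator, so step 1 here swaps in the gray-world assumption (the scene's average color is taken to be neutral), a simple and common choice used only for illustration; step 2 is a per-channel, diagonal (von Kries-style) scaling. Pixels are assumed to be linear RGB tuples, and the function names are hypothetical.

```python
def estimate_illuminant_gray_world(pixels):
    """Step 1: estimate the illuminant as the mean RGB of the image
    (gray-world assumption: the average scene color should be neutral)."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def adapt_to_neutral(pixels, illuminant, eps=1e-12):
    """Step 2: scale each channel independently (a diagonal transform) so the
    estimated illuminant maps to a neutral gray of the same average level."""
    target = sum(illuminant) / 3
    gains = [target / max(c, eps) for c in illuminant]  # eps avoids divide-by-zero
    return [tuple(v * g for v, g in zip(p, gains)) for p in pixels]


if __name__ == "__main__":
    image = [(200.0, 100.0, 50.0), (100.0, 50.0, 25.0)]  # toy data with a red cast
    light = estimate_illuminant_gray_world(image)
    print(light, adapt_to_neutral(image, light))
```

After step 2 the channel means of the corrected image are equal, which is exactly what "removing the color cast" means under the gray-world assumption; a scene that really is dominated by one color will be over-corrected, which is the main weakness of this estimator.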

Viggiano found that white balancing in the camera’s native RGB color model tended to produce less color inconstancy (i.e., less distortion of the colors) than in monitor RGB for over 4000 hypothetical sets of camera sensitivities.[9] This difference typically amounted to a factor of more than two in favor of camera RGB. This means that it is advantageous to get color balance right at the time an image is captured, rather than editing it later on a monitor. If one must color balance later, balancing the raw image data will tend to produce less distortion of chromatic colors than balancing in monitor RGB.

Stepping, little by little, into the world of "color in the brain":

Retinex theory

The effect (color constancy) was described in 1971 by Edwin H. Land, who formulated “retinex theory” to explain it. The word “retinex” is a portmanteau formed from “retina” and “cortex”, suggesting that both the eye and the brain are involved in the processing.

The effect can be experimentally demonstrated as follows. A display called a “Mondrian” (after Piet Mondrian whose paintings are similar) consisting of numerous colored patches is shown to a person. The display is illuminated by three white lights, one projected through a red filter, one projected through a green filter, and one projected through a blue filter. The person is asked to adjust the intensity of the lights so that a particular patch in the display appears white. The experimenter then measures the intensities of red, green, and blue light reflected from this white-appearing patch. Then the experimenter asks the person to identify the color of a neighboring patch, which, for example, appears green. Then the experimenter adjusts the lights so that the intensities of red, blue, and green light reflected from the green patch are the same as were originally measured from the white patch. The person shows color constancy in that the green patch continues to appear green, the white patch continues to appear white, and all the remaining patches continue to have their original colors.

Color constancy is a desirable feature of computer vision, and many algorithms have been developed for this purpose. These include several retinex algorithms.[8][9][10][11] These algorithms receive as input the red/green/blue values of each pixel of the image and attempt to estimate the reflectances of each point. One such algorithm operates as follows: the maximal red value rmax of all pixels is determined, and also the maximal green value gmax and the maximal blue value bmax. Assuming that the scene contains objects which reflect all red light, and (other) objects which reflect all green light and still others which reflect all blue light, one can then deduce that the illuminating light source is described by (rmax, gmax, bmax). For each pixel with values (r, g, b) its reflectance is estimated as (r/rmax, g/gmax, b/bmax). The original retinex algorithm proposed by Land and McCann uses a localized version of this principle.[12][13]
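The global max-RGB procedure just described translates almost line for line into code. This is a minimal sketch of that global variant only, not Land and McCann's localized retinex; the function name and the tuple-based pixel format are assumptions.

```python
def max_rgb_reflectance(pixels):
    """Global max-RGB estimate: the illuminant is taken to be
    (rmax, gmax, bmax), and each pixel's reflectance is
    (r/rmax, g/gmax, b/bmax)."""
    rmax = max(p[0] for p in pixels)
    gmax = max(p[1] for p in pixels)
    bmax = max(p[2] for p in pixels)
    if 0 in (rmax, gmax, bmax):
        raise ValueError("a channel is zero everywhere; illuminant undefined")
    illuminant = (rmax, gmax, bmax)
    reflectances = [(r / rmax, g / gmax, b / bmax) for r, g, b in pixels]
    return illuminant, reflectances


if __name__ == "__main__":
    scene = [(200, 50, 40), (100, 160, 20), (50, 40, 80)]
    light, refl = max_rgb_reflectance(scene)
    print(light)
    print(refl)
```

Since each channel's maximum sets the illuminant estimate, a single specular highlight or noisy bright pixel can skew it, which is why the assumption above (that some object reflects all of the red, all of the green, and all of the blue light) carries so much weight.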

Although retinex models are still widely used in computer vision, actual human color perception has been shown to be more complex.[14]