To discuss the MIPI Alliance's

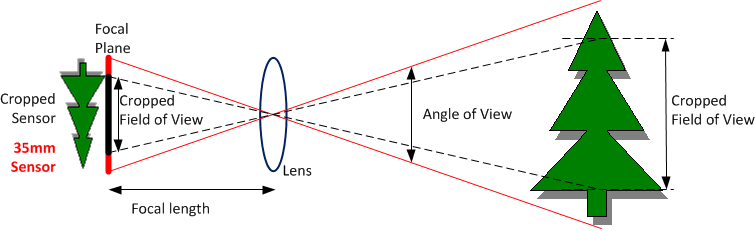

MIPI CSI-2 and MIPI CSI-3 are the successors of the original MIPI camera interface standard, and both standards continue to evolve. Both are highly capable architectures that give designers, manufacturers – and ultimately consumers – more options and greater value while maintaining the advantages of standard interfaces.

Evolving CSI-2 Specification

The bandwidths of today’s host processor-to-camera sensor interfaces are being pushed to their limits by the demand for higher image resolution, greater color depth and faster frame rates. But more bandwidth is simply not enough for designers with performance targets that span multiple product generations.

The mobile industry needs a standard, robust, scalable, low-power, high-speed, cost-effective camera interface that supports a wide range of imaging solutions for mobile devices.

The MIPI® Alliance Camera Working Group has created a clear design path that is sufficiently flexible to resolve not just today’s bandwidth challenge but “features and functionality” challenges of an industry that manufactures more than a billion handsets each year for a wide spectrum of users, applications and cost points.

Additional details are available in the MIPI Camera CSI-2 Specification Brief.

one must first mention

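Intellectual property rights, also known as IP rights, refer to creations of the mind: inventions, literary and artistic works, and symbols, names, images and designs used in commerce.[1] Intellectual property rights can be divided into two categories, industrial property and copyright. Industrial property includes inventions (patents), trademarks, industrial designs and geographical indications, while copyright covers literary and artistic works.[1]

The idea of intellectual property rights as covering all rights that arise from the field of intellectual activity began with the writings of the French scholar Carpzov in the mid-17th century and was later developed by the Belgian jurist Picard. After the Convention Establishing the World Intellectual Property Organization was signed in 1967, the concept was recognized by most countries in the world.[2]

History

The origin of intellectual property can no longer be traced. One account places it in Venice: medieval Venetian commerce allowed technical and textile designs to be registered and traded, and to be sold exclusively once a patent had been obtained.

This was later extended so that works of art could also be bought and sold, treated as a right representing the author's moral rights. A work of art has a different value depending on its author; for example, French art collecting places particular emphasis on the identity of the artist in order to underline the value of the work.

In the thirteenth century there were the public notice (bangwen) issued by the Liangzhe Transport Commission in the second year of Jiaxi (1238) for Zhu Mu's Fangyu Shenglan, and the license (zhizhao) issued by the Imperial Academy (Guozijian) at the temporary capital in the eighth year of Chunyou (1248) to Duan Changwu for the printing of Conggui Maoshi Jijie.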

, also abbreviated as "IP"

Intellectual property (IP) refers to creations of the intellect for which a monopoly is assigned to designated owners by law.[1] Intellectual property rights (IPRs) are the protections granted to the creators of IP, and include trademarks, copyright, patents, industrial design rights, and in some jurisdictions trade secrets.[2] Artistic works including music and literature, as well as discoveries, inventions, words, phrases, symbols, and designs can all be protected as intellectual property.

While intellectual property law has evolved over centuries, it was not until the 19th century that the term intellectual property began to be used, and not until the late 20th century that it became commonplace in the majority of the world.[3]

History

The Statute of Monopolies (1624) and the British Statute of Anne (1710) are seen as the origins of patent law and copyright respectively,[4] firmly establishing the concept of intellectual property.

The first known use of the term intellectual property dates to 1769, when a piece published in the Monthly Review used the phrase.[5] The first clear example of modern usage goes back as early as 1808, when it was used as a heading title in a collection of essays.[6]

The German equivalent was used with the founding of the North German Confederation whose constitution granted legislative power over the protection of intellectual property (Schutz des geistigen Eigentums) to the confederation.[7] When the administrative secretariats established by the Paris Convention (1883) and the Berne Convention (1886) merged in 1893, they located in Berne, and also adopted the term intellectual property in their new combined title, the United International Bureaux for the Protection of Intellectual Property.

The organization subsequently relocated to Geneva in 1960, and was succeeded in 1967 with the establishment of the World Intellectual Property Organization (WIPO) by treaty as an agency of the United Nations. According to Lemley, it was only at this point that the term really began to be used in the United States (which had not been a party to the Berne Convention),[3] and it did not enter popular usage until passage of the Bayh-Dole Act in 1980.[8]

“The history of patents does not begin with inventions, but rather with royal grants by Queen Elizabeth I (1558–1603) for monopoly privileges… Approximately 200 years after the end of Elizabeth’s reign, however, a patent represents a legal right obtained by an inventor providing for exclusive control over the production and sale of his mechanical or scientific invention… [demonstrating] the evolution of patents from royal prerogative to common-law doctrine.”[9]

The term can be found used in an October 1845 Massachusetts Circuit Court ruling in the patent case Davoll et al. v. Brown, in which Justice Charles L. Woodbury wrote that “only in this way can we protect intellectual property, the labors of the mind, productions and interests are as much a man’s own…as the wheat he cultivates, or the flocks he rears.”[10] The statement that “discoveries are…property” goes back earlier. Section 1 of the French law of 1791 stated, “All new discoveries are the property of the author; to assure the inventor the property and temporary enjoyment of his discovery, there shall be delivered to him a patent for five, ten or fifteen years.”[11] In Europe, French author A. Nion mentioned propriété intellectuelle in his Droits civils des auteurs, artistes et inventeurs, published in 1846.

Until recently, the purpose of intellectual property law was to give as little protection as possible in order to encourage innovation. Historically, therefore, such rights were granted only when they were necessary to encourage invention, and were limited in time and scope.[12]

The concept’s origins can potentially be traced back further. Jewish law includes several considerations whose effects are similar to those of modern intellectual property laws, though the notion of intellectual creations as property does not seem to exist – notably the principle of Hasagat Ge’vul (unfair encroachment) was used to justify limited-term publisher (but not author) copyright in the 16th century.[13] In 500 BCE, the government of the Greek state of Sybaris offered one year’s patent “to all who should discover any new refinement in luxury”.[14]

This is truly hard to put into words; one can only point at the moon!

by 6by9 » Fri May 01, 2015 9:33 pm

So various people have asked about supporting this or that random camera, or HDMI input. Those at Pi Towers have been investigating various options, but none of those have come to fruition yet and raised several IP issues (something I really don’t want to get involved in!), or are impractical due to the effort involved in tuning the ISP for a new sensor.

I had a realisation that we could add a new MMAL (or IL if you really have to) component that just reads the data off the CSI2 bus and dumps it in the provided buffers. After a moderate amount of playing, I’ve got this working.

Firstly, this should currently be considered alpha code – it’s working, but there are quite a few things that are only partially implemented and/or not tested. If people have a chance to play with it and don’t find too many major holes in it, then I’ll get Dom to release it officially, but still a beta.

Secondly, this is ONLY providing access to the raw data. Opening up the Image Sensor Pipeline (ISP) is NOT an option. There are no real processing options for Bayer data within the GPU, so that may limit the sensors that are that useful with this.

Thirdly, all data that the Foundation has from Omnivision for the OV5647 is under NDA, therefore I cannot discuss the details there.

So what have we got?

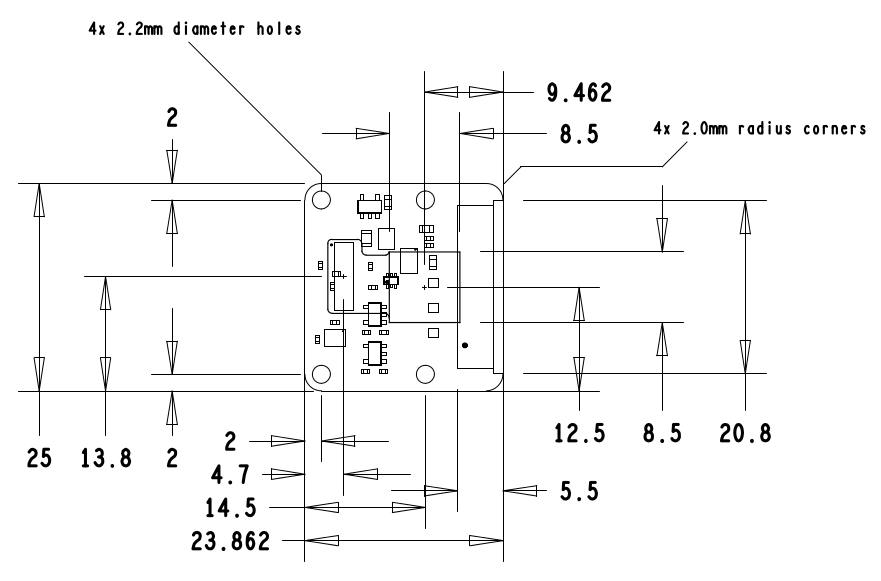

- There’s a test firmware on my github account (https://github.com/6by9/RPiTest/blob/master/rawcam/start_x.elf) that adds a new MMAL component (“vc.ril.rawcam”). It has one output port which will spit out the data received from the CSI-2 peripheral (known as Unicam). Please do a “sudo rpi-update” first as it is built from the same top of tree with my changes. DO NOT RISK A CRITICAL PI SYSTEM WITH THIS FIRMWARE. The required firmware changes are now in the official release; no need for special firmware.

- There’s a modified userland (https://github.com/6by9/userland/tree/rawcam) that includes the new header changes, and a new, very simple, app called raspiraw. It saves every 15th frame as rawXXXX.raw and runs for 30 seconds. The saved data is the same format as the raw on the end of the JPEG that you get from “raspistill -raw”, though you need to hack dcraw to get it to recognise the data. The code demonstrates the basic use of the component and includes code to start/stop the OV5647 streaming in the full 5MPix mode. It does not include in the source the GPIO manipulations required to be able to address the sensor, but there is a script “camera_i2c” that uses wiringPi to do that (I started doing it within the app, but that then required running it as root, and I didn’t like that). You do need to jump through the hoops to enable /dev/i2c-0 first (see the “Interfacing” forum, but it should just be adding “dtparam=i2c_vc=on” to /boot/config.txt, and “sudo modprobe i2c-dev”).

The OV5647 register settings in that app are those captured and posted on https://www.raspberrypi.org/forums/view … 25#p748855

- I’ve made use of zero copy within MMAL. So the buffers are allocated from GPU memory, but mapped into ARM virtual address space. It should save a fair chunk of just copying stuff around, which could be quite a burden when doing 5MPix at 15fps or similar. This requires a quick tweak to /lib/udev/rules.d/10-local-rpi.rules adding the line: SUBSYSTEM=="vc-sm", GROUP="video", MODE="0660".
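To put a rough number on the copying burden mentioned in the zero-copy item, here is a minimal Python sketch of the arithmetic. The figures are assumptions rather than values from the post: a 2592x1944 full-resolution OV5647 frame, 10 bits per pixel, tightly packed with no stride or height padding, and 15 frames per second. The function names are made up for illustration.

```python
# Rough estimate of raw Bayer frame size and stream data rate.
# All figures passed in below are assumptions for illustration:
# 2592x1944 pixels, 10 bits per pixel, no padding, 15 frames per second.


def raw_frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes in one tightly packed raw frame (lines rounded up to whole bytes)."""
    line_bytes = (width * bits_per_pixel + 7) // 8
    return line_bytes * height


def data_rate_bytes_per_s(width: int, height: int,
                          bits_per_pixel: int, fps: int) -> int:
    """Bytes per second delivered by the sensor at the given frame rate."""
    return raw_frame_bytes(width, height, bits_per_pixel) * fps


if __name__ == "__main__":
    frame = raw_frame_bytes(2592, 1944, 10)
    rate = data_rate_bytes_per_s(2592, 1944, 10, 15)
    print(f"frame size: {frame} bytes")
    print(f"data rate:  {rate / 1e6:.1f} MB/s")
```

Under these assumptions a frame is about 6.3 MB and the stream is roughly 94 MB/s before any padding, so every extra copy of each frame adds at least that much memory traffic again.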

Hmm, that means that we’ve just achieved the request in https://www.raspberrypi.org/forums/view … 3&t=108287, and things like the HDMI to CSI-2 receiver chips can now spit their data out into userland (although image packing formats may not be optimal, and the audio channel won’t currently come through)

What is this not doing?

- This is just reading the raw data out of the sensor. There is no AGC loop running, therefore you’ve got one fixed exposure time and analogue gain. Not going to be fixed as that is down to the individual sensor/image source.

- The handling of the sensor non-image data path is not tested. You will find that you always get a pair of buffers back with the same timestamp. One has the MMAL_BUFFER_HEADER_FLAG_CODECSIDEINFO flag set and should be the non-image data. I have not tested this at all as yet, and the length will always come through as the full buffer size at the moment.

- The hardware peripheral has quite a few nifty tricks up its sleeve, such as decompressing DPCM data, or repacking data to an alternate bit depth. This has not been tested, but the relevant enums are there.

- There are a bundle of timing registers and other setup values that the hardware takes. I haven’t checked exactly what can and can’t be divulged of the Broadcom hardware, so currently they are listed as parameters timing1-timing5, term1/term2, and cpi_timing1/cpi_timing2. I need to discuss with others whether these can be renamed to something more useful.

- This hasn’t been heavily tested. There is a bundle of extra logging on the VC side, so “sudo vcdbg log msg” should give a load of information if people hit problems.
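The paired buffers described in the list above (two buffers with the same timestamp, one flagged as side info) can be separated with logic along these lines. This is only a Python sketch of the idea, not the raspiraw code: `Buffer` is a hypothetical stand-in for an MMAL buffer header, and the flag value `1 << 7` is an assumption that should be checked against `MMAL_BUFFER_HEADER_FLAG_CODECSIDEINFO` in the userland headers.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple

# Assumed value of MMAL_BUFFER_HEADER_FLAG_CODECSIDEINFO; verify against
# mmal_buffer.h before relying on it.
FLAG_CODECSIDEINFO = 1 << 7


@dataclass
class Buffer:
    """Hypothetical stand-in for an MMAL buffer header."""
    pts: int      # presentation timestamp shared by the image/side-info pair
    flags: int    # bitmask of buffer header flags
    data: bytes   # payload


Pair = Tuple[Optional[Buffer], Optional[Buffer]]


def pair_buffers(buffers: Iterable[Buffer]) -> Dict[int, Pair]:
    """Group buffers by timestamp into (image, side_info) pairs."""
    frames: Dict[int, Pair] = {}
    for buf in buffers:
        image, side = frames.get(buf.pts, (None, None))
        if buf.flags & FLAG_CODECSIDEINFO:
            side = buf    # non-image data from the sensor
        else:
            image = buf   # raw image data
        frames[buf.pts] = (image, side)
    return frames


if __name__ == "__main__":
    stream = [
        Buffer(pts=100, flags=0, data=b"image-0"),
        Buffer(pts=100, flags=FLAG_CODECSIDEINFO, data=b"meta-0"),
        Buffer(pts=200, flags=0, data=b"image-1"),
    ]
    for pts, (image, side) in pair_buffers(stream).items():
        print(pts, image is not None, side is not None)
```

Since the post says the side-info length currently always comes through as the full buffer size, a consumer would probably ignore the side-info payload for now and keep only the image half of each pair.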

So it’s a bank holiday weekend, those who are interested please have a play and report back. I will offer assistance where I can, but obviously I can’t really help if you’ve hooked up an ABC123 sensor and it doesn’t work, as I won’t have one of those.

Longer term I do hope to find time to integrate this into the V4L2 soc-camera framework so that people can hopefully use a wider variety of sensors, but that is a longer term aim. The code for talking to MMAL from the kernel is already there in the bcm2835-v4l2 driver, and the demo code for the new component is linked here, so it doesn’t have to be me who does that.

I think that just about covers it all for now. Please do report back if you play with this – hopefully it’ll be useful to a fair few people, so I do want to improve it where needed.

Thanks to jbeale for following my daft requests and hooking an I2C analyser to the camera I2C, as that means I’m not breaking NDAs.

Further reading:

– The official CSI-2 spec is only available to MIPI Alliance members, but there is a copy of a spec on http://electronix.ru/forum/index.php?ac … t&id=67362 which should give the gist of how it works. If you really start playing, then you’ll have to understand how the image ID scheme works, image data packing, and the like.

– OV5647 docs – please don’t ask us for them. There is a copy floating around on the net which Google will find you, and there are also discussions on the Freescale i.MX6 forums about writing a V4L2 driver for that platform, so information may be gleaned from there (https://community.freescale.com/thread/310786 and similar).
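As a starting point for the image ID scheme and data packing mentioned in the first item above, here is a small Python sketch. It reflects my understanding of the public descriptions of CSI-2 and should be checked against the spec: the Data Identifier byte carries a 2-bit virtual channel in its top bits and a 6-bit data type below, with 0x2A, 0x2B and 0x2C used for RAW8, RAW10 and RAW12, and RAW10 packs four pixels into five bytes, with the fifth byte holding the two low bits of each pixel (first pixel in bits 1:0). The function names are made up for illustration.

```python
from typing import List, Tuple

# Data type codes for raw Bayer formats, as I understand the CSI-2 spec.
RAW_DATA_TYPES = {0x2A: "RAW8", 0x2B: "RAW10", 0x2C: "RAW12"}


def decode_data_id(di: int) -> Tuple[int, int]:
    """Split a CSI-2 Data Identifier byte into (virtual_channel, data_type)."""
    if not 0 <= di <= 0xFF:
        raise ValueError("data identifier must be a single byte")
    return di >> 6, di & 0x3F


def unpack_raw10(packed: bytes) -> List[int]:
    """Unpack RAW10 data (4 pixels per 5 bytes) into 10-bit pixel values.

    Assumed layout: bytes 0..3 hold bits 9:2 of pixels 0..3, and byte 4
    holds bits 1:0 of pixel 0 in its bits 1:0, pixel 1 in bits 3:2, etc.
    """
    if len(packed) % 5 != 0:
        raise ValueError("RAW10 data length must be a multiple of 5 bytes")
    pixels: List[int] = []
    for i in range(0, len(packed), 5):
        low_bits = packed[i + 4]
        for n in range(4):
            pixels.append((packed[i + n] << 2) | ((low_bits >> (2 * n)) & 0x3))
    return pixels


if __name__ == "__main__":
    vc, dt = decode_data_id(0x2B)
    print(vc, RAW_DATA_TYPES.get(dt, hex(dt)))
    print(unpack_raw10(bytes([0xFF, 0x00, 0x80, 0x01, 0b11100100])))
```

Real frames may also carry per-line padding, so a full decoder would first trim each line to the active width before unpacking.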

*edit*:

NB 1: As noted further down the thread, my scripts set up the GPIOs correctly for B+, and B+2 Pis (probably A+ too). If you are using an old A or B, please read lower to note the alternate GPIOs and I2C bus usage.

NB 2: This will NOT work with the new Pi display. The display also uses I2C-0 driven from the GPU, so adding in an ARM client of I2C-0 will cause issues. It may be possible to get the display to be recognised but not enable the touchscreen driver, but I haven’t investigated the options there.
