Pen in hand… I was wondering… how to introduce

Gym is a toolkit for developing and comparing reinforcement learning algorithms. It supports teaching agents everything from walking to playing games like Pong or Pinball.

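when, unexpectedly, the first thing to surface in my mind was

Pavlov

Ivan Petrovich Pavlov[1] (Russian: Иван Петрович Павлов, 26 September 1849 to 27 February 1936) was a Russian physiologist, psychologist and physician. He is known for being the first to describe classical conditioning, through his research on dogs, and in 1904 he received the Nobel Prize in Physiology or Medicine for his research on the digestive system.

That famous dog!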

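Classical conditioning

Classical conditioning (Pavlovian conditioning, respondent conditioning, alpha-conditioning) is a form of associative learning. Ivan Petrovich Pavlov described the kind of learning that produces conditioned behavior as "an animal's response to a specific conditioned stimulus". Its simplest form is the law of contiguity proposed by Aristotle: when two things frequently occur together, the brain's memory of one carries the other along with it.

Classical conditioning theory initially focused on reflexive, or involuntary, behavior. Every reflex is a relationship between a neutral stimulus and the response it produces. In recent years the restriction of classical conditioning theory to reflexes has been abandoned, and conditioned stimuli for voluntary behavior have also become an important research topic[1].

Ivan Pavlov's dog

The best-known example of classical conditioning is the salivary conditioned reflex of Pavlov's dogs. A dog salivates naturally in response to food; Pavlov treated the food as the unconditioned stimulus (US) and the salivation as the unconditioned response (UR), and called the relationship between the two the unconditioned reflex. If a sound, serving as a neutral stimulus (NS), is produced a few seconds before the food is presented, that sound becomes a conditioned stimulus (CS): on its own, with no food present, it can elicit salivation as a conditioned response (CR). The relationship between these two is called the conditioned reflex.

This relationship between a food-associated stimulus and the response it elicits is what is called classical conditioning. That food elicits salivation is innate, whereas the ability of a sound to elicit salivation stems from the individual animal's experience.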

The experiment can be summarized as follows:

Food (US) => salivation (UR)

Food (US) + sound (NS) => salivation (UR)

Sound (CS) => salivation (CR)

One of Pavlov's dogs, Pavlov Museum, 2005

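I could not help recalling… that I once doubted… whether behaviorist theory was really true!

Today, in the light of artificial intelligence, let us reflect on reinforcement learning: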

Reinforcement learning

Reinforcement learning (RL) is an area of machine learning concerned with how software agents ought to take actions in an environment so as to maximize some notion of cumulative reward. The problem, due to its generality, is studied in many other disciplines, such as game theory, control theory, operations research, information theory, simulation-based optimization, multi-agent systems, swarm intelligence, statistics and genetic algorithms. In the operations research and control literature, reinforcement learning is called approximate dynamic programming, or neuro-dynamic programming.[1][2] The problems of interest in reinforcement learning have also been studied in the theory of optimal control, which is concerned mostly with the existence and characterization of optimal solutions, and algorithms for their exact computation, and less with learning or approximation, particularly in the absence of a mathematical model of the environment. In economics and game theory, reinforcement learning may be used to explain how equilibrium may arise under bounded rationality. In machine learning, the environment is typically formulated as a Markov Decision Process (MDP), as many reinforcement learning algorithms for this context utilize dynamic programming techniques.[2][1][3] The main difference between the classical dynamic programming methods and reinforcement learning algorithms is that the latter do not assume knowledge of an exact mathematical model of the MDP and they target large MDPs where exact methods become infeasible.[2][1]

Reinforcement learning is considered one of three machine learning paradigms, alongside supervised learning and unsupervised learning. It differs from supervised learning in that correct input/output pairs need not be presented, and sub-optimal actions need not be explicitly corrected. Instead the focus is on performance, which involves finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge).[4] The exploration vs. exploitation trade-off has been most thoroughly studied through the multi-armed bandit problem and in finite MDPs.

Introduction

The typical framing of a Reinforcement Learning (RL) scenario: an agent takes actions in an environment, which is interpreted into a reward and a representation of the state, which are fed back into the agent.

Basic reinforcement is modeled as a Markov decision process:

- a set of environment and agent states, S;

- a set of actions, A, of the agent;

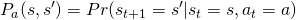

- $P_a(s, s') = \Pr(s_{t+1} = s' \mid s_t = s, a_t = a)$ is the probability of transition from state $s$ to state $s'$ under action $a$;

- $R_a(s, s')$ is the immediate reward after transition from $s$ to $s'$ with action $a$;

- rules that describe what the agent observes.

Rules are often stochastic. The observation typically involves the scalar, immediate reward associated with the last transition. In many works, the agent is assumed to observe the current environmental state (full observability). If not, the agent has partial observability. Sometimes the set of actions available to the agent is restricted (for example, a zero balance cannot be reduced).
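As a rough illustration of the definition above, a small MDP can be written down as plain lookup tables. The two-state example below is entirely hypothetical (the state names, action names, probabilities and rewards are invented for this sketch), and it assumes full observability.

```python
from typing import Dict, Tuple

State = str
Action = str

# Hypothetical two-state MDP, invented purely for illustration.
STATES = ("low", "high")
ACTIONS = ("wait", "work")

# P[(s, a)][s2] is the probability of moving from s to s2 under action a.
P: Dict[Tuple[State, Action], Dict[State, float]] = {
    ("low", "wait"): {"low": 1.0},
    ("low", "work"): {"low": 0.3, "high": 0.7},
    ("high", "wait"): {"low": 0.4, "high": 0.6},
    ("high", "work"): {"high": 1.0},
}

# R[(s, a, s2)] is the immediate reward for the transition s -> s2 under a.
R: Dict[Tuple[State, Action, State], float] = {
    ("low", "wait", "low"): 0.0,
    ("low", "work", "low"): -1.0,
    ("low", "work", "high"): -1.0,
    ("high", "wait", "low"): 2.0,
    ("high", "wait", "high"): 2.0,
    ("high", "work", "high"): 1.0,
}


def is_valid_mdp() -> bool:
    """Check that each (state, action) pair maps to a proper distribution."""
    for s in STATES:
        for a in ACTIONS:
            dist = P[(s, a)]
            if any(s2 not in STATES for s2 in dist):
                return False
            if abs(sum(dist.values()) - 1.0) > 1e-9:
                return False
    return True


def expected_reward(s: State, a: Action) -> float:
    """Expected immediate reward of taking action a in state s."""
    return sum(p * R[(s, a, s2)] for s2, p in P[(s, a)].items())
```

Storing the reward per transition rather than per state mirrors the $R_a(s, s')$ form used in the text; a per-state reward table would work equally well for simpler problems.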

A reinforcement learning agent interacts with its environment in discrete time steps. At each time $t$, the agent receives an observation $o_t$, which typically includes the reward $r_t$. It then chooses an action $a_t$ from the set of available actions, which is subsequently sent to the environment. The environment moves to a new state $s_{t+1}$ and the reward $r_{t+1}$ associated with the transition $(s_t, a_t, s_{t+1})$ is determined. The goal of a reinforcement learning agent is to collect as much reward as possible. The agent can (possibly randomly) choose any action as a function of the history.
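The discrete-time interaction just described can be sketched as a plain loop. The environment below is a hypothetical toy (a biased coin the agent has to guess); its class name, its `reset`/`step` methods and the `policy(observation, history)` signature are assumptions made for this sketch, not part of any library.

```python
import random


class CoinGuessEnv:
    """Hypothetical toy environment: guess a biased coin for `horizon` steps."""

    def __init__(self, horizon=10, p_heads=0.8, seed=0):
        self.horizon = horizon
        self.p_heads = p_heads
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        """Start a new episode and return the first observation."""
        self.t = 0
        return self.t  # the observation is simply the time step

    def step(self, action):
        """Apply an action (0 = tails, 1 = heads); return (obs, reward, done)."""
        outcome = 1 if self.rng.random() < self.p_heads else 0
        reward = 1.0 if action == outcome else 0.0
        self.t += 1
        done = self.t >= self.horizon
        return self.t, reward, done


def run_episode(env, policy):
    """Run one episode; the policy may depend on the whole history so far."""
    observation = env.reset()
    history = []  # list of (observation, action, reward) triples
    total_reward = 0.0
    done = False
    while not done:
        action = policy(observation, history)
        next_observation, reward, done = env.step(action)
        history.append((observation, action, reward))
        total_reward += reward
        observation = next_observation
    return total_reward, history


if __name__ == "__main__":
    # A trivial policy that always guesses heads, ignoring the history.
    total, hist = run_episode(CoinGuessEnv(), lambda obs, history: 1)
    print(total, len(hist))
```

Passing the history to the policy reflects the remark that the agent may choose its action as a function of everything it has seen, not only of the latest observation.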

When the agent’s performance is compared to that of an agent that acts optimally, the difference in performance gives rise to the notion of regret. In order to act near optimally, the agent must reason about the long term consequences of its actions (i.e., maximize future income), although the immediate reward associated with this might be negative.
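One common way to make regret concrete is the multi-armed bandit setting, where each action ("arm") has a fixed expected reward. The helper below is a minimal sketch under that assumption; the function name and the example arm means are hypothetical.

```python
def expected_regret(arm_means, pulls):
    """Expected regret of a sequence of arm pulls.

    arm_means[i] is the (assumed known) expected reward of arm i, and
    pulls is the sequence of arm indices the agent actually chose.
    An optimal agent would always pull the arm with the highest mean.
    """
    best_mean = max(arm_means)
    return sum(best_mean - arm_means[arm] for arm in pulls)


if __name__ == "__main__":
    means = [0.2, 0.5, 0.9]  # hypothetical arm means
    print(expected_regret(means, [0, 1, 2, 2]))
    print(expected_regret(means, [2, 2, 2, 2]))
```

An agent that always pulls the best arm has zero regret; every sub-optimal pull adds the gap between the best mean and the chosen arm's mean.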

Thus, reinforcement learning is particularly well-suited to problems that include a long-term versus short-term reward trade-off. It has been applied successfully to various problems, including robot control, elevator scheduling, telecommunications, backgammon, checkers[5] and Go (AlphaGo).

Two elements make reinforcement learning powerful: the use of samples to optimize performance and the use of function approximation to deal with large environments. Thanks to these two key components, reinforcement learning can be used in large environments in the following situations:

- A model of the environment is known, but an analytic solution is not available;

- Only a simulation model of the environment is given (the subject of simulation-based optimization);[6]

- The only way to collect information about the environment is to interact with it.

The first two of these problems could be considered planning problems (since some form of model is available), while the last one could be considered to be a genuine learning problem. However, reinforcement learning converts both planning problems to machine learning problems.
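For contrast with the learning setting, here is a minimal sketch of the planning case, where the model is fully known and small enough to solve exactly. It uses value iteration, one standard dynamic programming method; the text does not name a specific algorithm, so that choice is an assumption. The discount factor `gamma` and the two-state MDP are also hypothetical.

```python
def q_value(s, a, V, P, R, gamma):
    """Expected return of taking action a in state s, then following V."""
    return sum(p * (R[(s, a, s2)] + gamma * V[s2]) for s2, p in P[(s, a)].items())


def value_iteration(states, actions, P, R, gamma=0.9, tol=1e-8, max_iters=10_000):
    """Compute state values and a greedy policy for a known, finite MDP."""
    V = {s: 0.0 for s in states}
    for _ in range(max_iters):
        new_V = {s: max(q_value(s, a, V, P, R, gamma) for a in actions) for s in states}
        delta = max(abs(new_V[s] - V[s]) for s in states)
        V = new_V
        if delta < tol:  # values have stopped changing
            break
    policy = {s: max(actions, key=lambda a: q_value(s, a, V, P, R, gamma)) for s in states}
    return V, policy


# Hypothetical deterministic MDP: leaving A costs 1 now, but B pays 2 per step.
STATES = ("A", "B")
ACTIONS = ("stay", "go")
P = {
    ("A", "stay"): {"A": 1.0},
    ("A", "go"): {"B": 1.0},
    ("B", "stay"): {"B": 1.0},
    ("B", "go"): {"A": 1.0},
}
R = {
    ("A", "stay", "A"): 0.0,
    ("A", "go", "B"): -1.0,
    ("B", "stay", "B"): 2.0,
    ("B", "go", "A"): 0.0,
}

if __name__ == "__main__":
    V, policy = value_iteration(STATES, ACTIONS, P, R)
    print(policy)  # {'A': 'go', 'B': 'stay'}
```

The resulting policy accepts a negative immediate reward in state A in order to reach the better long-term payoff in B, which is the long-term versus short-term trade-off mentioned earlier. A reinforcement learning method would have to discover the same behavior from sampled transitions, without reading `P` and `R` directly.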

Exploration

Reinforcement learning requires clever exploration mechanisms. Randomly selecting actions, without reference to an estimated probability distribution, shows poor performance. The case of (small) finite Markov decision processes is relatively well understood. However, because algorithms that scale well with the number of states (or to problems with infinite state spaces) are lacking, simple exploration methods are the most practical.

One such method is $\epsilon$-greedy, in which the agent chooses the action that it believes has the best long-term effect with probability $1 - \epsilon$; otherwise, with probability $\epsilon$, it chooses an action uniformly at random. Here, $0 < \epsilon < 1$ is a tuning parameter, which is sometimes changed, either according to a fixed schedule (making the agent explore progressively less), or adaptively based on heuristics.[7]
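A minimal sketch of $\epsilon$-greedy action selection, tried out on a hypothetical Bernoulli bandit. The function names, the arm means and the use of sample averages as value estimates are assumptions made for illustration; ties between equally good actions are broken in favor of the lowest index.

```python
import random


def epsilon_greedy(estimates, epsilon, rng):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(estimates))  # explore uniformly at random
    return estimates.index(max(estimates))  # exploit the current best estimate


def run_bandit(arm_means, steps=2000, epsilon=0.1, seed=0):
    """Play a Bernoulli bandit with epsilon-greedy and sample-average estimates."""
    rng = random.Random(seed)
    counts = [0] * len(arm_means)
    estimates = [0.0] * len(arm_means)
    total_reward = 0.0
    for _ in range(steps):
        arm = epsilon_greedy(estimates, epsilon, rng)
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        counts[arm] += 1
        # Incremental update of the running mean reward for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward
    return estimates, counts, total_reward


if __name__ == "__main__":
    estimates, counts, total = run_bandit([0.2, 0.8])  # hypothetical arm means
    print(estimates, counts, total)
```

A schedule that explores progressively less could be added by recomputing `epsilon` from the step index inside the loop, for example as a decreasing function of the number of steps taken.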

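My point of view seems… without my noticing… to have wavered after all!!

Whether or not 'simple symbols' can explain the 'vast, ordered universe', anyone setting out would do well to first read the OpenAI Gym 'whitepaper':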

A whitepaper for OpenAI Gym is available at http://arxiv.org/abs/1606.01540, and here’s a BibTeX entry that you can use to cite it in a publication:

@misc{1606.01540,
  Author = {Greg Brockman and Vicki Cheung and Ludwig Pettersson and Jonas Schneider and John Schulman and Jie Tang and Wojciech Zaremba},
  Title = {OpenAI Gym},
  Year = {2016},
  Eprint = {arXiv:1606.01540},
}

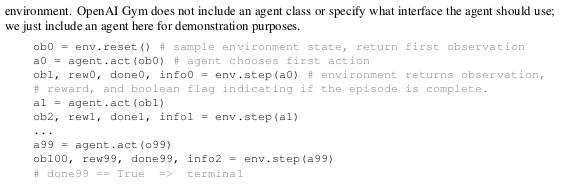

OpenAI Gym

OpenAI Gym is a toolkit for reinforcement learning research. It includes a growing collection of benchmark problems that expose a common interface, and a website where people can share their results and compare the performance of algorithms. This whitepaper discusses the components of OpenAI Gym and the design decisions that went into the software.

Submission history

From: John Schulman

[v1] Sun, 5 Jun 2016 17:54:48 UTC (546 KB)

and to understand its purpose:

Open source interface to reinforcement learning tasks.

The gym library provides an easy-to-use suite of reinforcement learning tasks.

import gym

env = gym.make("Taxi-v2")
observation = env.reset()
for _ in range(1000):
    env.render()
    action = env.action_space.sample()  # your agent here (this takes random actions)
    observation, reward, done, info = env.step(action)
    if done:
        # Start a new episode once the current one has ended.
        observation = env.reset()
env.close()

We provide the environment; you provide the algorithm.

You can write your agent using your existing numerical computation library, such as TensorFlow or Theano.

………

and only then set out on the road to settling one's doubts ◎

sudo pip3 install gym