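As the proverb goes: 'Heaven will rain, and the 娘 will marry' (天要下雨,娘要嫁人). What does it mean? Mr. 徐尚禮 once explained it in 中時電子報. If one 'Baidus' it, perhaps that '娘' really is the 'pure and lovely' 'young maiden' (姑娘)! Let us check it against the origins of the Chinese characters: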

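Shuowen Jiezi (《說文解字》)

孃: to trouble and disturb (煩擾). Another sense: fat and large (肥大). From 女, with 襄 as the phonetic. Fanqie reading: 女良切.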

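Shuowen Jiezi Zhu (《說文解字注》)

(孃) Means 煩□, to trouble and disturb. 煩 is a feverish headache; □ means 煩. People today write 擾攘 where the ancients used 孃. The Biography of Jia Yi (賈誼傳) writes 搶攘; the 在宥 chapter of the Zhuangzi writes 傖囊; the Chu Ci (楚詞) writes 恇攘; the vulgar form is 劻勷. All of these are merely loan characters (叚借字). Today 攘 is current and 孃 has fallen out of use. Note further that in the Guangyun (廣韵), 孃, read 女良切, is a term for mother, while 娘, also read 女良切, is a designation for a young girl. Tang writers kept the two characters sharply apart, so 耶孃 (father and mother) was never written with 娘. Few people today know this. Another sense: fat and large. The Fangyan (方言) says: □ means abundant (盛); in Qin and Jin some say □; whenever people speak of abundance, or praise the plump fullness of what they cherish, they call it □. Guo's commentary says: fat, with much flesh. Note that the 肉 section already has a character for this; this one and that one are identical in sound and meaning. The Han Shu (漢書) has 壤子王梁、代; the 壤 there is the character 孃. From 女, with 襄 as the phonetic. 女良切. Rhyme group ten (十部). Note that both the former and the latter sense should be read as 壤.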

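So does '娘' truly have a 'maiden's heart'?? If so, 'heaven will rain' is bound to happen, just as a maiden's spring longing means that 'the 娘 will marry' cannot be stopped!! Hence we know that under given conditions of 'time and space', the 'condition' (緣) of 'the 娘 will marry' may equal the 'cause' (因) of 'heaven will rain'; how then could the 'chances' of the two differ??!! And in the same way one can see why 'Socrates' 'had no choice but to die'!!??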

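The Death of Socrates

The Death of Socrates (French: La Mort de Socrate) is an oil painting created in 1787 by Jacques-Louis David, the French painter and founder of the Neoclassical school. Like David's other works of the same period, The Death of Socrates takes a classical subject: the death of Socrates as recorded by Plato in the Phaedo. The composed Socrates, discussing philosophy as he always did, inspires reverence, while the grief-stricken friends around him heighten the tragedy of the scene, giving the painting a solemn, resolute and austere artistic effect[1].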

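Background and creation

In 399 BC the seventy-year-old Socrates was charged with impiety and with corrupting the youth of Athens, and was sentenced to die by drinking poison. According to the Phaedo, Socrates faced death with great calm, carrying on philosophical discussion as usual with his disciples Crito, Phaedo, and Simmias and Cebes of Thebes, except that the topic was now what death is and what follows it. Socrates held that the soul is immortal and regarded death as another realm, a place different from the earthly world, rather than the end of existence[2].

In 1758 Diderot published his essay On Dramatic Poetry, arguing that the scene of Socrates's death was suitable as the subject of a pantomime; paintings of the subject appeared repeatedly afterwards[3]. During 1775-1780 David made his first trip to Rome, where he studied depictions of funeral rites and made many sketches. Many of David's major works derive from these drawings[4]. David had already made a sketch for this painting in 1782, and in 1786 he accepted a commission from the second son of Trudaine de Montigny and began work on it[5][6]. After its completion in 1787 it was exhibited at the Salon and was well received by many artists. Thomas Jefferson, then in Paris, also felt that the painting fully embodied the grand ideals of Neoclassicism, and in a letter to the American history painter John Trumbull he wrote that the best work in the exhibition was David's The Death of Socrates, a superb piece[7].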

| Artist | Jacques-Louis David |
|---|---|
| Year | Completed 1787 |
| Type | Oil painting |
| Dimensions | 129.5 cm × 196.2 cm |
| Location | Metropolitan Museum of Art, New York |

So one can understand why Mr. Michael Nielsen suddenly brings up 'Fermi' and his 'elephant remark':

Overfitting and regularization

The Nobel prizewinning physicist Enrico Fermi was once asked his opinion of a mathematical model some colleagues had proposed as the solution to an important unsolved physics problem. The model gave excellent agreement with experiment, but Fermi was skeptical. He asked how many free parameters could be set in the model. “Four” was the answer. Fermi replied*: “I remember my friend Johnny von Neumann used to say, with four parameters I can fit an elephant, and with five I can make him wiggle his trunk.”

*The quote comes from a charming article by Freeman Dyson, who is one of the people who proposed the flawed model. A four-parameter elephant may be found here.

The point, of course, is that models with a large number of free parameters can describe an amazingly wide range of phenomena. Even if such a model agrees well with the available data, that doesn’t make it a good model. It may just mean there’s enough freedom in the model that it can describe almost any data set of the given size, without capturing any genuine insights into the underlying phenomenon. When that happens the model will work well for the existing data, but will fail to generalize to new situations. The true test of a model is its ability to make predictions in situations it hasn’t been exposed to before.
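A minimal sketch of that point, under stated assumptions: the data below are hypothetical (a noisy version of the rule y = x), and `lagrange_fit` is a helper name introduced here, not anything from the quoted text. A polynomial with five free coefficients passes exactly through any five points with distinct x values, so a perfect fit on the existing data says nothing about how it behaves on a new input.

```python
from fractions import Fraction


def lagrange_fit(xs, ys):
    """Return the unique polynomial of degree < len(xs) through the given points."""
    xs = [Fraction(x) for x in xs]
    ys = [Fraction(y) for y in ys]

    def poly(x):
        x = Fraction(x)
        total = Fraction(0)
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    # Basis factor: equals 1 at xi and 0 at every other xj.
                    term *= (x - xj) / (xi - xj)
            total += term
        return total

    return poly


# Hypothetical data: the underlying rule is y = x, with "measurement noise" added.
train_x = [0, 1, 2, 3, 4]
train_y = [0, 2, 1, 4, 3]

model = lagrange_fit(train_x, train_y)  # five points, five free coefficients

# Perfect agreement with the data the model was fitted to.
train_error = max(abs(model(x) - y) for x, y in zip(train_x, train_y))  # 0

# An input the model has not seen; the rule y = x would suggest about 5.
prediction_at_5 = model(5)  # -25
```

Exact rational arithmetic (`Fraction`) is used only so that the zero training error is exact rather than approximately zero in floating point.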

Fermi and von Neumann were suspicious of models with four parameters. Our 30 hidden neuron network for classifying MNIST digits has nearly 24,000 parameters! That’s a lot of parameters. Our 100 hidden neuron network has nearly 80,000 parameters, and state-of-the-art deep neural nets sometimes contain millions or even billions of parameters. Should we trust the results?

───
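The parameter counts quoted above can be checked with a little arithmetic. The sketch below assumes a fully connected network with 784 inputs (28 × 28 pixel MNIST images) and 10 outputs, one weight per connection and one bias per non-input neuron; that layout is an assumption not spelled out in the excerpt, and `count_parameters` is a name introduced here for illustration.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected feed-forward network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Assumed layouts: 784 inputs, one hidden layer, 10 outputs.
print(count_parameters([784, 30, 10]))   # 23860, "nearly 24,000"
print(count_parameters([784, 100, 10]))  # 79510, "nearly 80,000"
```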

He even made a point of giving a link:

How to fit an elephant

John von Neumann famously said

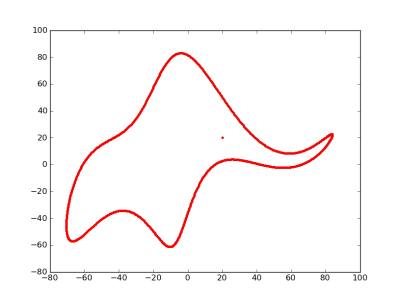

With four parameters I can fit an elephant, and with five I can make him wiggle his trunk.

By this he meant that one should not be impressed when a complex model fits a data set well. With enough parameters, you can fit any data set.

It turns out you can literally fit an elephant with four parameters if you allow the parameters to be complex numbers.

I mentioned von Neumann’s quote on StatFact last week and Piotr Zolnierczuk replied with reference to a paper explaining how to fit an elephant:

“Drawing an elephant with four complex parameters” by Jurgen Mayer, Khaled Khairy, and Jonathon Howard, Am. J. Phys. 78, 648 (2010), DOI:10.1119/1.3254017.

Piotr also sent me the following Python code he’d written to implement the method in the paper. This code produced the image above.

───
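The excerpt stops before the Python code it mentions. As a rough illustration of the underlying idea only, and not the paper's parameterization or Piotr's code, the sketch below treats a few complex numbers as Fourier coefficients of a closed curve z(t) = Σ c_k e^{ikt}. The coefficients are hypothetical and do not draw an elephant; `closed_curve` is a name introduced here.

```python
import cmath
import math


def closed_curve(coeffs, n_points=200):
    """Sample z(t) = sum_k c_k * exp(i*k*t) at n_points values of t in [0, 2*pi)."""
    points = []
    for m in range(n_points):
        t = 2 * math.pi * m / n_points
        z = sum(c * cmath.exp(1j * k * t) for k, c in coeffs.items())
        points.append((z.real, z.imag))
    return points


# Hypothetical coefficients, chosen only for illustration.
# Keys are integer frequencies k, values are complex amplitudes c_k.
coeffs = {1: 3 + 0j, -1: 1 + 0.5j, 2: 0.5 - 0.25j, -3: 0.25 + 0j}

outline = closed_curve(coeffs)  # list of (x, y) pairs tracing a closed outline
```

Because every frequency k is an integer, the curve returns to its starting point after one period, so four complex numbers (eight real degrees of freedom) already determine a whole closed outline.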

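Surely it was not a subconscious wish to 'save the elephants'?? Yet who could have known that it would stir up wistful memories of 'Lin Wang the elephant' (大象林旺)!!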