Water Margin (水滸傳), Yuan (Ming) dynasty ‧ compiled by Shi Nai'an

Chapter 16: Yang Zhi Escorts the Loads of Gold and Silver; Wu Yong Cleverly Seizes the Birthday Convoy

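Now, at that moment Gongsun Sheng was in the side chamber telling Chao Gai that the "birthday convoy" from the Northern Capital was ill-gotten wealth, so what harm could there be in taking it? Just then a man burst in from outside, seized Gongsun Sheng and said: "What nerve you have! I heard everything you were just plotting." The man was none other than Wu the Schoolmaster, "the Resourceful Star" (智多星). Chao Gai laughed: "Don't be alarmed, Professor; come and meet him." The two exchanged courtesies. Wu Yong said: "Among the rivers and lakes I have long heard of the great name of Gongsun Sheng Yiqing, 'Dragon in the Clouds' (入雲龍); I never expected to meet him here today!" Chao Gai said: "This scholar is Wu the Schoolmaster, 'the Resourceful Star'." Gongsun Sheng said: "I have heard many among the rivers and lakes speak of the great name of Master Jialiang; who would have thought fate would bring us together at the headman's manor? It is because the headman is generous with his wealth and upholds righteousness that heroes from all over the land come to his door." Chao Gai said: "A few more acquaintances are inside; let us all go into the inner hall to meet them." The three went in and met Liu Tang and the three Ruan brothers. Truly: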

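Hoarded gold and silk lay the groundwork for disaster; the gathering of heroes was never planned. For a moment these gallant men defy the yellow canopy of the throne, and the light of seven stars stirs the Purple Forbidden enclosure.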

………

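What is the 'use' of a generating function? Is 'using' what has 'no use' the 'great use'?? The way of 'using the great' is worth savoring!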

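Chapter 42 of the Laozi says: "The Dao gives birth to the One, the One gives birth to Two, Two gives birth to Three, and Three gives birth to the ten thousand things." In other words, heaven and earth bring forth all things as naturally as the four seasons turn. If 'man' is also to become 'great', he must know nature's way of 'increasing' life, and prize the ability to trace things back to their source and 'attain the One'. Laozi was certainly good at 'observing water' and praised 'the highest good is like water'. Yet he also knew well the hardship of 'Jian' (Obstruction), water blocked by mountains, and of 'Meng' (Folly), people hindered by desire. So in Chapter 39 he also says: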

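Of old, these attained the One: Heaven attained the One and became clear; Earth attained the One and became stable; the spirits attained the One and became numinous; the valleys attained the One and became full; the ten thousand things attained the One and came to life; lords and kings attained the One and became the standard for the world. It is the One that brings all this about. Were Heaven not clear, it might split; were Earth not stable, it might erupt; were the spirits not numinous, they might cease; were the valleys not full, they might run dry; were the ten thousand things not alive, they might perish; were lords and kings not upright and exalted, they might fall. Therefore the noble takes the humble as its root, and the high takes the low as its foundation. That is why lords and kings call themselves 'the orphaned', 'the widowed' and 'the unworthy'. Is this not taking the humble as the root? Is it not so? What people hate most is to be orphaned, widowed and unworthy, yet lords and kings take these as their titles. Hence the highest praise is no praise. Do not wish to glitter like jade; be rugged like stone.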

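His hope was that people would understand that the name 'Daode' (道德, 'the Way and its Virtue') really speaks of 'attaining' (得), that is, attaining the Dao!! If even heaven and earth had 'no road' to travel, where should people turn??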

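Long ago, in the struggle between Wu and Yue, King Goujian of Yue 'slept on brushwood and tasted gall' in pursuit of his dream of restoring his state. This is recorded in the Records of the Grand Historian (《史記‧越王勾踐世家》, 'Hereditary House of Goujian, King of Yue'). Here we turn to another man who is also recorded in the Shiji: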

Fan Li ‧ Records of the Grand Historian ‧ Biographies of the Money-Makers (范蠡‧史記‧貨殖列傳)

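In former times, when Goujian, King of Yue, was hemmed in on Mount Kuaiji, he employed Fan Li and Ji Ran. Ji Ran said: "If you know war is coming, you make preparations; if you know when things will be needed, you know which goods to stock. When these two are clear, the state of all commodities can be observed. Thus when Jupiter stands in Metal, the harvest is abundant; in Water, crops fail; in Wood, there is famine; in Fire, drought. In drought, invest in boats; in flood, invest in carts: such is the principle of things. Six years of plenty, six years of drought, and once every twelve years a great famine. When grain sells at twenty, farmers suffer; at ninety, merchants suffer. When merchants suffer, wealth does not circulate; when farmers suffer, the fields are not cleared. If the price goes no higher than eighty and no lower than thirty, both farmers and merchants profit. With grain sold at stable prices and goods kept in balance, the checkpoints and markets never run short. This is the way to govern a state. The principle of keeping stock is to make sure the goods are sound and not to let money lie idle. In trading goods, do not hold on to perishable foodstuffs, and do not dare hoard for a high price. By examining surplus and shortage, one knows whether prices will rise or fall. When the dear reaches its peak, it turns cheap; when the cheap reaches its bottom, it turns dear. When things are dear, sell them off as if they were dung; when they are cheap, buy them up as if they were pearls and jade. Wealth and money should flow like running water." Yue applied this for ten years and the state grew rich. It rewarded its soldiers generously, and they rushed into arrows and stones like the thirsty to drink. In the end Yue took revenge on mighty Wu, paraded its troops in the Central States, and was styled one of the "Five Hegemons".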

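Having avenged the humiliation of Kuaiji, Fan Li sighed and said: "Ji Ran had seven policies; Yue used five of them and achieved its aims. Since they have already been applied to the state, I wish to apply them to my household." So he set off in a small boat over the rivers and lakes and changed his name. He went to Qi as Chiyi Zipi, and to Tao as Lord Zhu. Lord Zhu judged that Tao was the center of the world, open to the feudal states on all sides, a place where goods changed hands. So he managed his property and accumulated stock, following the times rather than pressing others. Thus one who is good at making a living can choose the right people and seize the right time. Within nineteen years he three times amassed a thousand pieces of gold, and twice gave it away to poor friends and distant kin. This is what is meant by being rich and delighting in practicing virtue. In later years, grown old, he left affairs to his sons and grandsons. They kept up the business and increased it until it reached tens of thousands. Hence whenever people speak of wealth, they name Lord Zhu of Tao (陶朱公).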

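Wenzi (Ji Ran) had seven policies for governing the state and enriching the people; the King of Yue used only five of them and was already smug. Fan Li reflected carefully. What had been used amounted to 'the timing of Heaven' and 'the advantages of terrain'; was not what remained unused precisely 'harmony among people'? "Having won his kingdom, Goujian is bound to lose his people. If I do not set off now in a small boat over the rivers and lakes, I fear I will not even keep my life." Later generations honor Lord Zhu of Tao as the 'God of Wealth'. This is not because he could draw a great fortune from the world, but because he could 'three times gather and three times disperse' it, using it for the world. At this point, read again two parables from Zhuangzi: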

Zhuangzi ‧ 'Rifling Trunks' (胠篋): he who steals a belt hook is executed, he who steals a state becomes a lord;

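As long as the sages do not die, great thieves will not cease. Even if you honor the sages in governing the world, you only heap profit on Robber Zhi. Make pecks and bushels to measure things, and the thieves will steal the pecks and bushels along with the goods. Make scales and balances to weigh things, and they will steal the scales and balances too. Make tallies and seals to secure trust, and they will steal the tallies and seals too. Make benevolence and righteousness to reform people, and they will steal benevolence and righteousness too. How do I know this is so? He who steals a belt hook is executed, while he who steals a state becomes a lord, and at the lord's gate benevolence and righteousness are found. Is this not stealing benevolence, righteousness, sageliness and wisdom? Some follow the great thieves, set themselves up as lords, and steal benevolence and righteousness together with the profits of measures, scales, tallies and seals. Rewards of carriages and caps of office cannot encourage them, and the threat of axes cannot restrain them. That Robber Zhi is so richly profited and cannot be stopped is the fault of the sages.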

Zhuangzi ‧ 'Free and Easy Wandering' (逍遙遊): the useless put to great use;

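Huizi said to Zhuangzi: "The King of Wei gave me the seeds of a great gourd. I planted them, and the fruit grew big enough to hold five piculs. I filled it with water, but it was not sturdy enough to lift by itself. I split it to make ladles, but they were too broad and flat to hold anything. It was certainly huge, but I smashed it because it was useless."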

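Zhuangzi said: "You, sir, are certainly clumsy at using big things! There was a man of Song who was good at making a salve that kept hands from chapping, and for generations his family made their living bleaching silk floss. A traveler heard of it and offered to buy the recipe for a hundred pieces of gold. The clan gathered to discuss it and said: 'For generations we have bleached silk and earned no more than a few pieces of gold; now in a single morning we can sell the technique for a hundred. Let us give it to him.' The traveler took it and used it to win over the King of Wu. When Yue made trouble, the King of Wu made him a general. In winter he fought a naval battle with the men of Yue, routed them, and was rewarded with a fief carved from the land. The ability to prevent chapped hands was the same. One man won a fief with it, while the others never escaped bleaching silk: the difference lay in how it was used. Now you had a gourd that held five piculs. Why didn't you think of making it into a great float and drifting over the rivers and lakes, instead of worrying that it was too broad and flat to hold anything? It seems, sir, your mind is still full of brambles!"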

─── Excerpted from 《跟隨□?築夢!!》 (Following □? Building Dreams!!)

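Kindred spirits may 'speak of the small'!! But how can the art of 'using the small' be put into words? The breath lies in each inhalation and exhalation. 'Treading on frost, one knows solid ice will follow,' as nature runs its course. Dripping water wears through stone, so how could the day never come!! Aiming high without a foundation, how could one ever reach the time when flowers bloom and the moon is full?? Great and small, inner and outer, all are heaven and earth ☆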

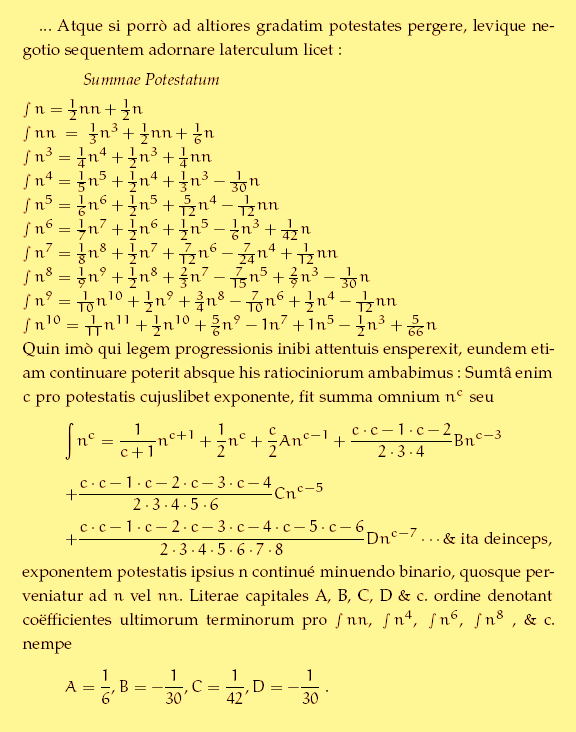

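Having obtained the generating function of the Bernoulli numbers, $\frac{x}{e^x - 1} = \sum_{n=0}^{\infty} B_n \frac{x^n}{n!}$, shall we first explore its parity?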
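Multiplying both sides by $e^x - 1 = \sum_{j \ge 1} x^j / j!$ and matching coefficients gives $B_0 = 1$ and $\sum_{k=0}^{n} \binom{n+1}{k} B_k = 0$ for $n \ge 1$. The $B_n$ can therefore be generated one after another. The sketch below does this in exact rational arithmetic. It assumes the $\frac{x}{e^x - 1}$ convention, so $B_1 = -\frac{1}{2}$; the function name `bernoulli_numbers` is ours, not the author's.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(count):
    """Return [B_0, ..., B_{count-1}] for x/(e^x - 1) = sum B_n x^n / n!."""
    b = [Fraction(1)]  # B_0 = 1
    for n in range(1, count):
        # sum_{k=0}^{n} C(n+1, k) B_k = 0, solved for the last term B_n
        s = sum(comb(n + 1, k) * b[k] for k in range(n))
        b.append(-s / (n + 1))
    return b


for n, bn in enumerate(bernoulli_numbers(9)):
    print(n, bn)
```

Running it prints $B_0$ through $B_8$ as 1, -1/2, 1/6, 0, -1/30, 0, 1/42, 0, -1/30. Every odd index beyond 1 comes out zero.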


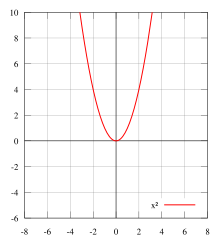

Even and odd functions

In mathematics, even functions and odd functions are functions which satisfy particular symmetry relations, with respect to taking additive inverses. They are important in many areas of mathematical analysis, especially the theory of power series and Fourier series. They are named for the parity of the powers of the power functions which satisfy each condition: the function $f(x) = x^n$ is an even function if $n$ is an even integer, and it is an odd function if $n$ is an odd integer.

Definition and examples


The concept of evenness or oddness is defined for functions whose domain and image both have an additive inverse. This includes additive groups, all rings, all fields, and all vector spaces. Thus, for example, a real-valued function of a real variable could be even or odd, as could a complex-valued function of a vector variable, and so on.

The following examples are real-valued functions of a real variable, chosen to illustrate the symmetry of their graphs.

Even functions

Let f(x) be a real-valued function of a real variable. Then f is even if the following equation holds for all x and -x in the domain of f:[1]

$$f(x) = f(-x)$$

or

$$f(x) - f(-x) = 0.$$

Geometrically speaking, the graph of an even function is symmetric with respect to the y-axis, meaning that its graph remains unchanged after reflection about the y-axis.

Examples of even functions are $|x|$, $x^2$, $x^4$, $\cos(x)$, $\cosh(x)$, or any linear combination of these.

Odd functions

Again, let f(x) be a real-valued function of a real variable. Then f is odd if the following equation holds for all x and -x in the domain of f:[2]

$$f(-x) = -f(x)$$

or

$$f(x) + f(-x) = 0.$$

Geometrically, the graph of an odd function has rotational symmetry with respect to the origin, meaning that its graph remains unchanged after rotation of 180 degrees about the origin.

Examples of odd functions are $x$, $x^3$, $\sin(x)$, $\sinh(x)$, $\operatorname{erf}(x)$, or any linear combination of these.
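The two definitions can be spot-checked numerically at sample points. In this sketch, the helper names `is_even`, `is_odd` and `bernoulli_gf`, the sample points and the tolerance are our own choices. A finite sample is only a sanity check, not a proof. Applied to the Bernoulli generating function $x/(e^x - 1)$, the check finds neither symmetry, while $x/(e^x - 1) + x/2$ passes the evenness test.

```python
import math


def is_even(f, xs, tol=1e-12):
    """Spot-check f(x) == f(-x) at the sample points xs (numerical, not a proof)."""
    return all(math.isclose(f(x), f(-x), rel_tol=tol, abs_tol=tol) for x in xs)


def is_odd(f, xs, tol=1e-12):
    """Spot-check f(-x) == -f(x) at the sample points xs."""
    return all(math.isclose(f(-x), -f(x), rel_tol=tol, abs_tol=tol) for x in xs)


def bernoulli_gf(x):
    # x / (e^x - 1); expm1 keeps precision for small x. The samples avoid x = 0.
    return x / math.expm1(x)


samples = [0.1, 0.5, 1.0, 2.0, 3.7]

print(is_even(math.cos, samples), is_odd(math.sin, samples))          # True True
print(is_even(bernoulli_gf, samples), is_odd(bernoulli_gf, samples))  # False False
print(is_even(lambda x: bernoulli_gf(x) + x / 2, samples))            # True
```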

Although $\frac{x}{e^x - 1}$ is neither even nor odd, this form ![]() leads straight, through mathematics and physics, onto the road of light:

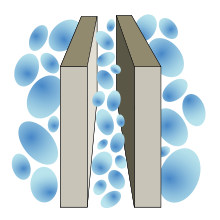

Bose–Einstein statistics

In quantum statistics, Bose–Einstein statistics (or more colloquially B–E statistics) is one of two possible ways in which a collection of non-interacting indistinguishable particles may occupy a set of available discrete energy states, at thermodynamic equilibrium. The aggregation of particles in the same state, which is a characteristic of particles obeying Bose–Einstein statistics, accounts for the cohesive streaming of laser light and the frictionless creeping of superfluid helium. The theory of this behaviour was developed (1924–25) by Satyendra Nath Bose, who recognized that a collection of identical and indistinguishable particles can be distributed in this way. The idea was later adopted and extended by Albert Einstein in collaboration with Bose.

The Bose–Einstein statistics apply only to those particles not limited to single occupancy of the same state—that is, particles that do not obey the Pauli exclusion principle restrictions. Such particles have integer values of spin and are named bosons, after the statistics that correctly describe their behaviour. There must also be no significant interaction between the particles.

Derivation from the grand canonical ensemble

The Bose–Einstein distribution, which applies only to a quantum system of non-interacting bosons, is easily derived from the grand canonical ensemble.[3] In this ensemble, the system is able to exchange energy and exchange particles with a reservoir (temperature T and chemical potential µ fixed by the reservoir).

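Due to the non-interacting quality, each available single-particle level (with energy level ϵ) forms a separate thermodynamic system in contact with the reservoir. In other words, each single-particle level is a separate, tiny grand canonical ensemble. With bosons there is no limit on the number of particles N in the level, but due to indistinguishability each possible N corresponds to only one microstate (with energy Nϵ). The resulting partition function for that single-particle level therefore forms a geometric series:

$$\mathcal{Z} = \sum_{N=0}^{\infty} \exp\big(N(\mu - \epsilon)/k_B T\big) = \sum_{N=0}^{\infty} \big[\exp\big((\mu - \epsilon)/k_B T\big)\big]^{N} = \frac{1}{1 - \exp\big((\mu - \epsilon)/k_B T\big)}$$

and the average particle number for that single-particle substate is given by

$$\bar{N} = k_B T \, \frac{1}{\mathcal{Z}} \left(\frac{\partial \mathcal{Z}}{\partial \mu}\right)_{V,T} = \frac{1}{\exp\big((\epsilon - \mu)/k_B T\big) - 1}$$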

This result applies for each single-particle level and thus forms the Bose–Einstein distribution for the entire state of the system.[4][5]
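The geometric-series argument can be checked numerically for a single level. The sketch works in the dimensionless variable $x = (\epsilon - \mu)/k_B T > 0$, averages $N$ over the Gibbs weights $e^{-Nx}$ by direct summation, and compares the result with $1/(e^{x} - 1)$. The helper names and the truncation of the infinite sum at a cutoff `n_max` are our own choices.

```python
import math


def mean_occupancy_by_sum(x, n_max=10_000):
    """Average N for one bosonic level by summing the weights exp(-N x) directly.

    x = (epsilon - mu) / (k_B T) must be positive so the geometric series converges;
    n_max truncates the infinite sum (an assumption of this sketch).
    """
    q = math.exp(-x)                      # ratio exp((mu - epsilon) / k_B T)
    weights = [q**n for n in range(n_max)]
    z = sum(weights)                      # truncated grand partition function
    return sum(n * w for n, w in enumerate(weights)) / z


def bose_einstein(x):
    """Closed form 1 / (exp((epsilon - mu) / k_B T) - 1)."""
    return 1.0 / math.expm1(x)


for x in (0.1, 1.0, 3.0):
    print(x, mean_occupancy_by_sum(x), bose_einstein(x))
```

For each $x$ the two printed values should agree to many digits.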

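The variance in particle number (due to thermal fluctuations) may also be derived:

$$\langle (\Delta N)^2 \rangle = k_B T \left(\frac{d\bar{N}}{d\mu}\right)_{V,T} = \bar{N}\,(1 + \bar{N})$$

This level of fluctuation is much larger than for distinguishable particles, which would instead show Poisson statistics ($\langle (\Delta N)^2 \rangle = \bar{N}$).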
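For one level, the occupation probabilities $p(N) \propto e^{-Nx}$, with $x = (\epsilon - \mu)/k_B T > 0$, form a geometric distribution. Its mean and variance can be computed by brute force and compared with $\bar{N}(1 + \bar{N})$ and with the Poisson value $\bar{N}$. The helper name `occupancy_moments` and the cutoff `n_max` are our own choices in this sketch.

```python
import math


def occupancy_moments(x, n_max=10_000):
    """Mean and variance of N for one bosonic level with weights exp(-N x).

    x = (epsilon - mu) / (k_B T) > 0; n_max truncates the infinite sum (sketch assumption).
    """
    q = math.exp(-x)
    weights = [q**n for n in range(n_max)]
    z = sum(weights)
    mean = sum(n * w for n, w in enumerate(weights)) / z
    mean_sq = sum(n * n * w for n, w in enumerate(weights)) / z
    return mean, mean_sq - mean**2


for x in (0.1, 1.0):
    mean, var = occupancy_moments(x)
    # Bose-Einstein predicts var = mean * (1 + mean); Poisson would give var = mean.
    print(f"x={x}: mean={mean:.4f}, variance={var:.4f}, "
          f"mean*(1+mean)={mean * (1 + mean):.4f}, poisson={mean:.4f}")
```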

The discovery began with a mistake:

History

While presenting a lecture at the University of Dhaka on the theory of radiation and the ultraviolet catastrophe, Satyendra Nath Bose intended to show his students that the contemporary theory was inadequate, because it predicted results not in accordance with experimental results. During this lecture, Bose committed an error in applying the theory, which unexpectedly gave a prediction that agreed with the experiment. The error was a simple mistake, similar to arguing that flipping two fair coins will produce two heads one-third of the time, that would appear obviously wrong to anyone with a basic understanding of statistics (remarkably, this error resembled the famous blunder by d’Alembert known from his “Croix ou Pile” article). However, the results it predicted agreed with experiment, and Bose realized it might not be a mistake after all. For the first time, he took the position that the Maxwell–Boltzmann distribution would not be true for all microscopic particles at all scales. Thus, he studied the probability of finding particles in various states in phase space, where each state is a little patch having volume $h^3$, and the position and momentum of the particles are not kept particularly separate but are considered as one variable.

Bose adapted this lecture into a short article called “Planck’s Law and the Hypothesis of Light Quanta”[1][2] and submitted it to the Philosophical Magazine. However, the referee’s report was negative, and the paper was rejected. Undaunted, he sent the manuscript to Albert Einstein requesting publication in the Zeitschrift für Physik. Einstein immediately agreed, personally translated the article from English into German (Bose had earlier translated Einstein’s article on the theory of General Relativity from German to English), and saw to it that it was published. Bose’s theory achieved respect when Einstein sent his own paper in support of Bose’s to Zeitschrift für Physik, asking that they be published together. This was done in 1924.

Bose produced accurate results because photons are indistinguishable from each other: one cannot treat any two photons having equal energy as two distinct, identifiable photons. By analogy, if in an alternate universe coins were to behave like photons and other bosons, the probability of producing two heads would indeed be one-third. So would the probability of getting one head and one tail, which for conventional (classical, distinguishable) coins equals one-half. Bose’s “error” leads to what is now called Bose–Einstein statistics.
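The two-coin analogy can be made concrete by counting equally likely outcomes. In this sketch, classical coins are modelled as ordered pairs and 'bosonic' coins as unordered multisets. That modelling choice and all names are ours. The counts reproduce the one-half and one-third figures in the text, along with the classical one-quarter for two heads.

```python
from fractions import Fraction
from itertools import combinations_with_replacement, product

SIDES = ("H", "T")

# Distinguishable (classical) coins: the ordered outcomes HH, HT, TH, TT are equally likely.
CLASSICAL = list(product(SIDES, repeat=2))
# Indistinguishable, "bosonic" coins: only the unordered multiset counts,
# so HH, HT, TT are the equally likely outcomes.
BOSONIC = list(combinations_with_replacement(SIDES, 2))


def prob(outcomes, event):
    """Probability of `event` when every listed outcome is equally likely."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


def two_heads(outcome):
    return outcome.count("H") == 2


def one_of_each(outcome):
    return outcome.count("H") == 1


print(prob(CLASSICAL, two_heads), prob(CLASSICAL, one_of_each))  # 1/4 1/2
print(prob(BOSONIC, two_heads), prob(BOSONIC, one_of_each))      # 1/3 1/3
```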

Bose and Einstein extended the idea to atoms, and this led to the prediction of a phenomenon that became known as the Bose–Einstein condensate, a dense collection of bosons (particles with integer spin, named after Bose), whose existence was demonstrated experimentally in 1995.

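Could it be the work of angels??!! Photons are everywhere, and Bernoulli numbers keep turning up!!?? All dwell in the house of 'true emptiness, wondrous being' ☆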

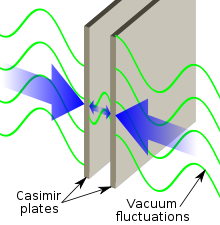

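In 1948 the Dutch physicist Hendrik Casimir put forward the 'argument' that 'the vacuum is not empty'. According to 'quantum field theory', the 'vacuum' must also have a 'lowest energy level'. So whether or not the 'vacuum energy' arises from the 'creation and annihilation' of 'real' and 'virtual' particles, it must always be in some 'quantum state'. The 'main binding force' between 'atoms' and 'molecules' is known to be the 'electromagnetic force'. How, then, do the 'quantization' of the 'vacuum' and the 'reality' of 'matter' 'fit together'? He therefore 'calculated' the 'size' of this 'possible effect'. Yet whatever 'oscillation' was responsible, he always had to face the 'problem' of 'infinitely many resonant states' ![](). That is, he faced the 'problem' of how 'many' 'photons?' of each energy take part 'on average' ![](). Casimir reportedly used the 'summation methods' of 'Euler' and others and obtained ![]().

The 'negative' sign here ![]() represents 'attraction', and this has long since been 'confirmed'. Did the 'universe' truly have a 'plan' from the start? Or are 'people' still just 'fantasizing'??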

─── Excerpted from 《【Sonic π】電聲學之電路學《四》之《 V!》‧下》 (Sonic π: Circuit Theory for Electroacoustics, Part 4, 'V!', second half)
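The specific formulas in this excerpt did not survive extraction, so we do not guess them here. What can be said with certainty is how Bernoulli numbers enter the Euler-style summation the excerpt mentions. The zeta-regularized value assigned to $\sum_{k \ge 1} k^n$ is $\zeta(-n) = (-1)^n \frac{B_{n+1}}{n+1}$, with $B_1 = -\frac{1}{2}$. This gives, for instance, $\zeta(-1) = -\frac{1}{12}$ and $\zeta(-3) = \frac{1}{120}$, values that appear in textbook treatments of the Casimir effect. The sketch below computes these in exact arithmetic; the function names are ours.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(count):
    """[B_0, ..., B_{count-1}] in the x/(e^x - 1) convention (B_1 = -1/2)."""
    b = [Fraction(1)]
    for n in range(1, count):
        b.append(-sum(comb(n + 1, k) * b[k] for k in range(n)) / (n + 1))
    return b


def zeta_at_negative_integer(n):
    """Regularized value zeta(-n) = (-1)**n * B_{n+1} / (n + 1), for integers n >= 0."""
    b = bernoulli_numbers(n + 2)
    return (-1) ** n * b[n + 1] / (n + 1)


print(zeta_at_negative_integer(1))  # value assigned to 1 + 2 + 3 + ...        -> -1/12
print(zeta_at_negative_integer(3))  # value assigned to 1^3 + 2^3 + 3^3 + ...  -> 1/120
```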

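Once the relation appears, the insight flashes forth ![](). It turns out that this Bernoulli number is the only odd one ![](), ![](); without seeking elsewhere, the number makes itself known ![](). The recurrence seemed nowhere to be found

![]()

and yet precisely here we obtain

![]()

☆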
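The formulas in the lines above were lost in extraction. One standard argument for the claim that $B_1$ is the only nonzero Bernoulli number of odd index runs as follows, assuming the $\frac{x}{e^x - 1}$ convention. It is offered as a reconstruction of the idea, not necessarily the author's own derivation.

$$
\begin{aligned}
f(x) &= \frac{x}{e^x - 1} = \sum_{n=0}^{\infty} B_n \frac{x^n}{n!},\\
f(-x) &= \frac{-x}{e^{-x} - 1} = \frac{x e^x}{e^x - 1} = x + \frac{x}{e^x - 1} = f(x) + x,\\
g(x) &:= f(x) + \frac{x}{2} = \frac{x}{2}\cdot\frac{e^x + 1}{e^x - 1} = \frac{x}{2}\coth\frac{x}{2},
\qquad g(-x) = f(x) + x - \frac{x}{2} = g(x).
\end{aligned}
$$

Since $g$ is even, every odd-power coefficient of $g(x) = \sum_{n} B_n \frac{x^n}{n!} + \frac{x}{2}$ must vanish. The $x^1$ term gives $B_1 + \frac{1}{2} = 0$, that is, $B_1 = -\frac{1}{2}$, and $B_{2k+1} = 0$ for every $k \ge 1$.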

![{\displaystyle {\begin{aligned}{\mathcal {Z}}&=\sum _{N=0}^{\infty }\exp(N(\mu -\epsilon )/k_{B}T)=\sum _{N=0}^{\infty }[\exp((\mu -\epsilon )/k_{B}T)]^{N}\\&={\frac {1}{1-\exp((\mu -\epsilon )/k_{B}T)}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/bdce034df3ca7555063c4246d8374d6ccd65bd69)

```python
# Originally an interactive IPython 2.3.0 session under Python 3.4.2.
# Limits reported in that session: n, n**2/2 - n/2, n**3/3 - n**2/2 + n/6
from sympy import diff, exp, limit, pprint, symbols

n, x = symbols('n x')

# The function (e^{nx} - 1)/(e^x - 1); at x = 0 it takes the form 0/0
生成函數 = (exp(n*x) - 1)/(exp(x) - 1)
pprint(生成函數)
print(limit(生成函數, x, 0))

# First derivative with respect to x, and its limit at x = 0
生成函數一階導數 = diff(生成函數, x)
pprint(生成函數一階導數)
print(limit(生成函數一階導數, x, 0))

# Second derivative with respect to x, and its limit at x = 0
生成函數二階導數 = diff(生成函數一階導數, x)
pprint(生成函數二階導數)
print(limit(生成函數二階導數, x, 0))
```

Why insist on working through the calculation ourselves??!! Simply because experience comes from the process!!??
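For a positive integer $n$, the quotient $\frac{e^{nx} - 1}{e^x - 1}$ equals $\sum_{k=0}^{n-1} e^{kx}$. Its $m$-th derivative at $x = 0$ is therefore the power sum $\sum_{k=0}^{n-1} k^m$, which is exactly what the three limits in the session compute for $m = 0, 1, 2$. Faulhaber's formula $\sum_{k=0}^{n-1} k^m = \frac{1}{m+1}\sum_{j=0}^{m} \binom{m+1}{j} B_j\, n^{m+1-j}$ expresses these sums through Bernoulli numbers. The sketch below checks the formula in exact arithmetic against brute force, assuming $B_1 = -\frac{1}{2}$; the function names are ours.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(count):
    """[B_0, ..., B_{count-1}] in the x/(e^x - 1) convention (B_1 = -1/2)."""
    b = [Fraction(1)]
    for n in range(1, count):
        b.append(-sum(comb(n + 1, k) * b[k] for k in range(n)) / (n + 1))
    return b


def power_sum(m, n):
    """sum_{k=0}^{n-1} k**m via Faulhaber's formula with Bernoulli numbers."""
    b = bernoulli_numbers(m + 1)
    return sum(comb(m + 1, j) * b[j] * n ** (m + 1 - j) for j in range(m + 1)) / (m + 1)


# m = 0, 1, 2 reproduce the limits n, n^2/2 - n/2 and n^3/3 - n^2/2 + n/6.
for m in range(4):
    for n in (1, 5, 10):
        assert power_sum(m, n) == sum(k**m for k in range(n))
print(power_sum(2, 10))  # 0^2 + 1^2 + ... + 9^2 = 285
```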

Why do we always run into $\frac{0}{0}$?

Zero of a function

[Figure: graph of cos(x) on $[-2\pi, 2\pi]$ with its x-intercepts marked in red; the zeros are at $-\frac{3\pi}{2}$, $-\frac{\pi}{2}$, $\frac{\pi}{2}$ and $\frac{3\pi}{2}$.]

In mathematics, a zero, also sometimes called a root, of a real-, complex- or generally vector-valued function $f$ is a member $x$ of the domain of $f$ such that $f(x)$ vanishes at $x$; that is, $x$ is a solution of the equation

$$f(x) = 0.$$

In other words, a "zero" of a function is an input value that produces an output of zero (0).[1]

A root of a polynomial is a zero of the corresponding polynomial function. The fundamental theorem of algebra shows that any non-zero polynomial has a number of roots at most equal to its degree. The number of roots equals the degree when one considers the complex roots (or, more generally, the roots in an algebraically closed extension) counted with their multiplicities. For example, the polynomial $f$ of degree two, defined by

$$f(x) = x^2 - 5x + 6,$$

has the two roots 2 and 3, since

$$f(2) = 2^2 - 5 \cdot 2 + 6 = 0 \quad \text{and} \quad f(3) = 3^2 - 5 \cdot 3 + 6 = 0.$$

If the function maps real numbers to real numbers, its zeroes are the $x$-coordinates of the points where its graph meets the $x$-axis. An alternative name for such a point $(x, 0)$ in this context is an $x$-intercept.

Solution of an equation

Every equation in the unknown $x$ may be rewritten as

$$f(x) = 0$$

by regrouping all terms in the left-hand side. It follows that the solutions of such an equation are exactly the zeros of the function $f$. In other words, "zero of a function" is a phrase denoting a "solution of the equation obtained by equating the function to 0", and the study of zeros of functions is exactly the same as the study of solutions of equations.

Polynomial roots

Every real polynomial of odd degree has an odd number of real roots (counting multiplicities); likewise, a real polynomial of even degree must have an even number of real roots. Consequently, real polynomials of odd degree must have at least one real root (because one is the smallest odd whole number), whereas those of even degree may have none. This principle can be proven by reference to the intermediate value theorem: since polynomial functions are continuous, the function value must cross zero in the process of changing from negative to positive or vice versa.

Fundamental theorem of algebra

The fundamental theorem of algebra states that every polynomial of degree $n$ has $n$ complex roots, counted with their multiplicities. The non-real roots of polynomials with real coefficients come in conjugate pairs.[1] Vieta's formulas relate the coefficients of a polynomial to sums and products of its roots.

'Analysis' speaks by distinguishing the 'imaginary' from the 'real'; the principles of 'root finding' run as deep as the Way:

Fundamental theorem of algebra (代數基本定理)

The fundamental theorem of algebra states that every single-variable polynomial equation with complex coefficients has at least one complex root. In other words, the field of complex numbers is algebraically closed.

The theorem is sometimes stated as: every non-zero single-variable polynomial of degree n with complex coefficients has exactly n complex roots. This looks like a stronger statement, but it is in fact a direct consequence of "at least one root". Repeatedly dividing the polynomial by its linear factors leads from one root to n roots.

Although the theorem is named the "fundamental theorem of algebra", it has no purely algebraic proof, and many mathematicians believe no such proof exists.[1] Nor is it the most fundamental theorem of algebra. It received the name because, at the time, algebra was essentially about solving polynomial equations with real or complex coefficients.

Gauss gave four proofs of this theorem over his lifetime, the first in his doctoral dissertation in 1799, at the age of 22. His proofs used geometric, function-theoretic and integral methods. Gauss's approach was to prove the existence of the roots, which opened a new route to the study of existence propositions.

Meanwhile, solving higher-degree algebraic equations remained a major problem. Galois theory shows that general equations of degree five or higher have no general algebraic solution.

The Bernoulli numbers have their source: it lies neither in the numerator $e^{nx} - 1 = \sum_{k=1}^{\infty} \frac{(nx)^k}{k!}$
